Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

PDF for link building - avoiding duplicate content

-

Hello,

We've got an article that we're turning into a PDF. Both the article and the PDF will be on our site. This PDF is a good, thorough piece of content on how to choose a product.

We're going to strip out all of the links to our site from the article to create this PDF, so that it will be good for people to reference and even print. Then we're going to do link building through outreach, since people will find the article and PDF useful.

My question is, how do I use rel="canonical" to make sure that the article and PDF aren't duplicate content?

Thanks.

-

Hey Bob

I think you should forget about any kind of perceived conventions and have whatever you think works best for your users and goals.

Again, look at Unbounce: that is a custom landing page with a homepage link (to share the love) but without the general site navigation.

They also have a footer to do a bit more link love but really, do what works for you.

Forget conventions - do what works!

Hope that helps

Marcus -

I see, thanks! I think it's important not to have the ecommerce navigation on the page promoting the PDF. What would you say is ideal for the graphical and navigation components of the page that hosts the PDF: what kind of navigation and graphical header should it have?

-

Yep, check the HTTP headers with webbug, or use one of the many browser plugins that let you see the headers for a document.
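As an alternative to a browser plugin, a few lines of Python can send a HEAD request and pull any rel="canonical" entry out of the Link response header. This is a minimal sketch: the function names are made up for illustration, and the parser is deliberately simple (it splits entries on commas, so it assumes there are no commas inside the URLs).

```python
import urllib.request
from typing import Optional


def canonical_from_link_header(link_value: str) -> Optional[str]:
    """Return the URL of the first rel="canonical" entry in a Link header value.

    Simplified parsing: entries are split on commas, parameters on semicolons.
    """
    for entry in link_value.split(","):
        parts = [p.strip() for p in entry.split(";")]
        url_part, params = parts[0], parts[1:]
        if not (url_part.startswith("<") and url_part.endswith(">")):
            continue
        for param in params:
            name, _, value = param.partition("=")
            # rel may hold several space-separated values, e.g. rel="canonical alternate"
            rels = value.strip().strip('"').lower().split()
            if name.strip().lower() == "rel" and "canonical" in rels:
                return url_part[1:-1]
    return None


def fetch_canonical(url: str) -> Optional[str]:
    """Send a HEAD request and return the canonical URL from the Link header, if any."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        # get_all returns every Link header line, or None when there is none.
        link_values = response.headers.get_all("Link") or []
    for value in link_values:
        canonical = canonical_from_link_header(value)
        if canonical:
            return canonical
    return None
```

For example, `fetch_canonical("http://www.domain.com/pdfs/white-papers.pdf")` should return the article's URL once the header is in place, and `None` if it is missing.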

That said, I would push to drive the links to the page rather than to the document itself: create a nice page that houses the document and make that the link target.

You could even make the PDF link available only by email once people have signed up, or some such, as canonical is only a directive that search engines can choose to ignore, and you would still be better off getting those links flooding into a real page on the site.

You could even offer up some HTML that links to your main page, to make it easier for folks to link to you. If you take a look at any savvy infographic, folks will try to draw links into a page rather than to the image itself, for the very same reasons.

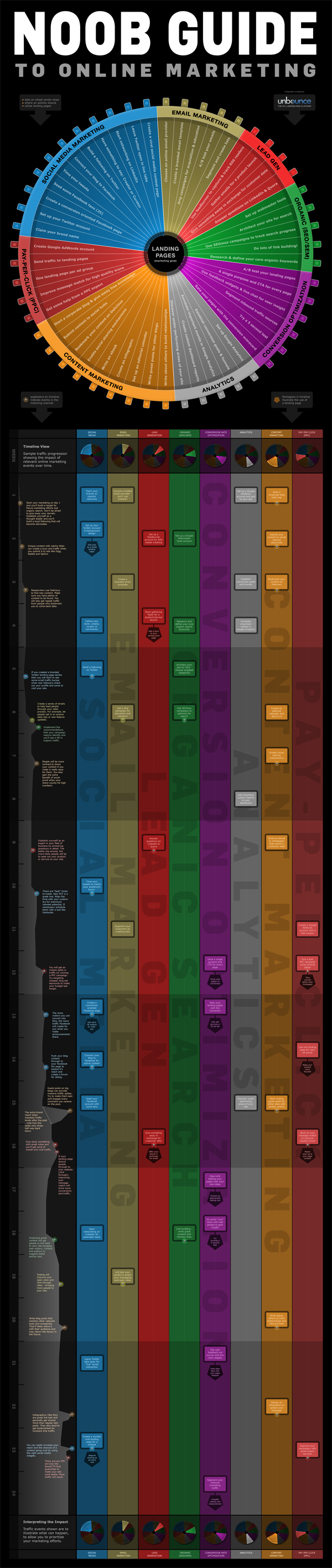

If you look at something like the Noob Guide to Online Marketing from Unbounce, you will see something like this as the suggested linking code:

[Suggested embed code: the infographic image linked to http://unbounce.com/noob-guide-to-online-marketing-infographic/, followed by the text "Unbounce – The DIY Landing Page Platform"]

So, the image is there but the link they are pimping is a standard page:

http://unbounce.com/noob-guide-to-online-marketing-infographic/

They also cheekily add an extra homepage link with some keywords and the brand, so if folks don't remove it they still get that benefit.

Ultimately, it means that when links flood into the site they benefit the whole site rather than just promote one PDF.
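The same idea can be sketched for your own content: a small helper that builds copy-and-paste embed HTML in which the image points at the landing page rather than at the file, plus an optional homepage link like Unbounce's. The function name and all URLs in the example are hypothetical.

```python
from html import escape


def embed_snippet(image_url: str, page_url: str, alt_text: str,
                  home_url: str = "", home_anchor: str = "") -> str:
    """Return embed HTML whose image links to page_url (not to the image or PDF file).

    If home_url is given, a second text link to the homepage is appended.
    """
    # escape() also escapes quotes by default, so values are safe inside attributes.
    img = f'<img src="{escape(image_url)}" alt="{escape(alt_text)}">'
    snippet = f'<a href="{escape(page_url)}">{img}</a>'
    if home_url:
        snippet += f'\n<p><a href="{escape(home_url)}">{escape(home_anchor)}</a></p>'
    return snippet
```

Whatever people paste, the link equity lands on the HTML page (and optionally the homepage), which is the point being made above.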

Just my tuppence!

Marcus -

Thanks for the code Marcus.

Actually, the PDF is what people will be linking to. It's a guide for websites, and I think the PDF will be much easier to promote than the article. I assume so, anyway.

Is there a way to make sure my canonical code in htaccess is working after I insert the code?

Thanks again,

Bob

-

Hey Bob

There is a much easier way to do this: simply put the PDFs you don't want indexed in a folder that you block in robots.txt. That way you can drop PDFs into articles and link to them, knowing full well they will not be indexed.

Assuming you had a PDF called article.pdf in a folder called pdfs/, the following would stop search engines crawling it, which keeps its content out of the index:

User-agent: *
Disallow: /pdfs/

Or to just block the file itself:

User-agent: *
Disallow: /pdfs/yourfile.pdf

Additionally, there is no reason not to add the canonical link as well. If you find people are linking directly to the PDF, having it would ensure that the equity associated with those links is correctly attributed to the parent page (always a good thing). In .htaccess, wrapped in a Files block so the header is only sent with the PDF:

<Files "article.pdf">
Header add Link '<http://www.url.co.uk/pdfs/article.html>; rel="canonical"'
</Files>
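To sanity-check robots.txt rules like these before uploading them, Python's standard `urllib.robotparser` can evaluate a robots.txt body against sample URLs. A minimal sketch using the example paths from this answer (the helper name is hypothetical). One thing worth keeping in mind: a crawler that obeys these rules will not fetch the PDF at all, so it also won't see any canonical header set on it.

```python
from urllib.robotparser import RobotFileParser

# The folder-level rule from the answer above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /pdfs/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines; no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

Here `is_crawlable(ROBOTS_TXT, "http://www.url.co.uk/pdfs/article.pdf")` is `False`, while a page outside /pdfs/ stays crawlable.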

Generally, there are better ways to block indexation than robots.txt, but in the case of PDFs we really don't want these files indexed: they make for poor landing pages (no navigation), and we certainly want to remove any competition or duplication between the page and the PDF. So in this case, it makes for a quick, painless and suitable solution.

Hope that helps!

Marcus -

Thanks ThompsonPaul,

Say the pdf is located at

domain.com/pdfs/white-papers.pdf

and the article that I want to rank is at

domain.com/articles/article.html

do I simply add this to my htaccess file?:

Header add Link '<http://www.domain.com/articles/article.html>; rel="canonical"'

-

You can insert the canonical header link using your site's .htaccess file, Bob. I'm sure Hostgator provides access to the .htaccess file through FTP (sometimes you have to turn on "show hidden files") or through the file manager built into your cPanel.

Check tip #2 in this recent SEOMoz blog article for specifics:

seomoz.org/blog/htaccess-file-snippets-for-seos

Just remember, too: you will want to do the same kind of on-page optimization for the PDF as you do for regular pages.

- Give it a good, descriptive, keyword-appropriate, dash-separated file name. (This is essential for usability as well, since it becomes the name of the icon when saved to someone's desktop.)

- Fill out the metadata for the PDF, especially the Title and Description. In Acrobat it's under File -> Properties -> Description tab (to get the meta-description itself, you'll need to click on the Additional Metadata button)
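For the file name, here is one way to turn a title into a lowercase, dash-separated name. It is only a sketch: the function name and example title are hypothetical, and accented characters are transliterated where possible and otherwise dropped.

```python
import re
import unicodedata


def pdf_filename(title: str) -> str:
    """Turn a document title into a lowercase, dash-separated .pdf file name."""
    # Split accented characters into base letter + accent, then drop non-ASCII parts.
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace each run of non-alphanumeric characters with a single dash.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")
    return f"{slug}.pdf"
```

For example, "How to Choose a Widget: The Complete Guide" becomes how-to-choose-a-widget-the-complete-guide.pdf.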

I'd be tempted to build links to the HTML page as much as possible, as those will directly help ranking, unlike the PDF's inbound links, which will have to pass their link juice through the canonical (assuming you're using it). Plus, visitors will get a preview of the PDF's content and context from the rest of your site, which may increase trust and engender further engagement.

Your comment about links in the PDF got kind of muddled, but you'll definitely want to make certain there are good links and calls to action back to your website within the PDF, preferably on each page. Otherwise there's no clear "next step" leading users from the PDF back to a purchase on your site. Make sure to put Analytics tracking tags on these links so you can assess the value of the traffic the PDF sends back; otherwise that traffic will just appear as Direct in your Analytics.
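With Google Analytics, the usual tracking tags are the utm_source, utm_medium and utm_campaign query parameters. A minimal sketch for tagging the links you put in the PDF; the function name and the parameter values in the example are hypothetical, so pick values that match your own reporting.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str, campaign: str) -> str:
    """Append utm_* campaign parameters to a URL, keeping any existing query string."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [("utm_source", source), ("utm_medium", medium), ("utm_campaign", campaign)]
    return urlunsplit(parts._replace(query=urlencode(query)))
```

For example, `add_utm("https://www.example.com/products/widget", "buying-guide-pdf", "pdf", "how-to-choose")` yields a link whose visits show up under that campaign instead of as Direct.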

Hope that all helps,

Paul

-

Can I just use htaccess?

See here: http://www.seomoz.org/blog/how-to-advanced-relcanonical-http-headers

We only have one pdf like this right now and we plan to have no more than five.

Say the pdf is located at

domain.com/pdfs/white-papers.pdf

and the article that I want to rank is at

domain.com/articles/article.pdf

do I simply add this to my htaccess file?:

Header add Link '<http://www.domain.com/articles/article.pdf>; rel="canonical"'
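With only a handful of PDFs planned, the per-file directives could be generated rather than typed by hand. This sketch assumes Apache with mod_headers enabled and .htaccess overrides allowed for it (worth confirming with the host); wrapping each directive in a `<Files>` block keeps the header from being added to every page the .htaccess file covers. The helper name is hypothetical.

```python
from typing import Dict


def htaccess_canonical_blocks(pdf_to_page: Dict[str, str]) -> str:
    """Return .htaccess text adding a Link rel="canonical" header to each listed PDF.

    Keys are PDF file names (matched by <Files>); values are the absolute URLs
    of the pages that should be treated as canonical.
    """
    blocks = []
    for pdf_name, page_url in pdf_to_page.items():
        blocks.append(
            f'<Files "{pdf_name}">\n'
            f"    Header add Link '<{page_url}>; rel=\"canonical\"'\n"
            "</Files>"
        )
    return "\n".join(blocks) + "\n"
```

For the example above, `htaccess_canonical_blocks({"white-papers.pdf": "http://www.domain.com/articles/article.html"})` produces a single `<Files "white-papers.pdf">` block containing the Header line.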

-

How do I know if I can do an HTTP header request? I'm using shared hosting through Hostgator.

-

PDFs seem not to rank as well as normal webpages. They still rank, don't get me wrong: we have over 100 PDF pages that get traffic for us. Which version is the main one is really up to you: what do you want to show in the search results? I think it would be easier to rank a normal webpage, though. If you use rel="canonical", it will pass most of the link juice; not all, but most.

-

Thank you DoRM,

I assume that the PDF is what I want to be the main version, since that is what I'll be marketing, but I could be wrong. If I get backlinks to both pages, will both sets of backlinks count?

-

Indicate the canonical version of a URL by responding with the Link rel="canonical" HTTP header. Adding rel="canonical" to the head section of a page is useful for HTML content, but it can't be used for PDFs and other file types indexed by Google Web Search. In these cases you can indicate a canonical URL by responding with the Link rel="canonical" HTTP header, like this (note that to use this option, you'll need to be able to configure your server):

Link: <http://www.example.com/downloads/white-paper.pdf>; rel="canonical"

Google currently supports these link header elements for Web Search only.
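To show what "responding with the Link rel="canonical" HTTP header" means on the server side, here is a minimal server built on Python's standard library that does exactly that. It is an illustration only: the path, the canonical URL and the placeholder PDF bytes are assumptions, and on an Apache host the .htaccess Header directive discussed in this thread does the same job.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical mapping: PDF path on this server -> page to treat as canonical.
CANONICALS = {
    "/downloads/white-paper.pdf": "http://www.example.com/downloads/white-paper.html",
}
# Placeholder bytes standing in for a real PDF file.
PDF_BYTES = b"%PDF-1.4\n% placeholder body\n"


class PdfHandler(BaseHTTPRequestHandler):
    """Serves the placeholder PDF with a Link rel="canonical" response header."""

    def _send_pdf_headers(self) -> bool:
        canonical = CANONICALS.get(self.path)
        if canonical is None:
            self.send_error(404)
            return False
        self.send_response(200)
        self.send_header("Content-Type", "application/pdf")
        self.send_header("Content-Length", str(len(PDF_BYTES)))
        # Same header format as in the quoted documentation.
        self.send_header("Link", f'<{canonical}>; rel="canonical"')
        self.end_headers()
        return True

    def do_HEAD(self) -> None:
        self._send_pdf_headers()

    def do_GET(self) -> None:
        if self._send_pdf_headers():
            self.wfile.write(PDF_BYTES)


def start_in_background(port: int = 0) -> HTTPServer:
    """Start the server on localhost in a daemon thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), PdfHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

After `start_in_background(8000)`, a HEAD or GET request for http://127.0.0.1:8000/downloads/white-paper.pdf returns the Link header; any other path gets a 404.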

You can read more here: http://support.google.com/webmasters/bin/answer.py?hl=en&answer=139394
