Moz Q&A is closed.

After more than 13 years and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content (many posts will still be viewable), we have locked both new posts and new replies.

Page Indexing without content

-

Hello.

I have a problem with pages being indexed without content. I have a website in 3 different languages; 2 of the language versions are indexed just fine, but one (the most important one) is indexed without content. When I search using the site: operator, the page comes up, but when I search for unique keywords for which I should definitely rank, nothing comes up.

This page was indexed just fine, and the problem arose a couple of days ago, after a Google update finished rolling out. Looking further, the problem is language-related: every newly indexed page in that language has this problem, while pages that were last crawled around one week ago are just fine.

Has anyone run into this type of problem?

-

I've encountered a similar indexing issue on my website, https://sunasusa.com/. To resolve it, ensure that the language markup and content accessibility on the affected pages are correct. Review any recent changes and the quality of your content. Utilize Google Search Console for insights, or consider reaching out to Google support for assistance.

-

To remove hacked URLs in bulk from Google's index, clean up your website, secure it, and then use Google Search Console to request removal of the unwanted URLs. Additionally, submit a new sitemap containing only valid URLs to expedite re-indexing.
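As a rough illustration of the sitemap step, here is a minimal Python sketch that builds a sitemap from a list of known-good URLs. The function name `build_sitemap` and the example.com URLs are hypothetical, and it assumes you already have a trusted list of valid pages (for example from a clean backup or your CMS).

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(valid_urls):
    """Return sitemap XML (as a string) listing only the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    # dict.fromkeys drops duplicates while keeping the original order.
    for url in dict.fromkeys(valid_urls):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical URLs, for illustration only.
    pages = ["https://example.com/", "https://example.com/blog/clean-post"]
    print(build_sitemap(pages))
```

The output can be saved as sitemap.xml and submitted in Search Console; special characters such as `&` in URLs are escaped by the XML serializer.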

-

It seems that after a recent Google update, one language version of your website is experiencing indexing issues, while others remain unaffected. This could be due to factors like changes in algorithms or technical issues. To address this:

- Check hreflang tags and content quality.

- Review technical aspects like crawlability and indexing directives.

- Monitor Google Search Console for errors.

- Consider seeking expert assistance if needed.
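The hreflang check in the list above can be sketched in Python roughly as follows. This is only an illustration: the class and function names, the language codes, and the example.com URLs are assumptions, and it inspects hreflang annotations in the page HTML only (not in sitemaps or HTTP headers).

```python
from html.parser import HTMLParser


class HreflangCollector(HTMLParser):
    """Collect hreflang -> href pairs from <link rel="alternate"> tags."""

    def __init__(self):
        super().__init__()
        self.alternates = {}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if "alternate" in rel and attr.get("hreflang"):
            self.alternates[attr["hreflang"].lower()] = attr.get("href")


def missing_hreflang(html, expected_langs):
    """Return the expected language codes that have no hreflang link."""
    collector = HreflangCollector()
    collector.feed(html)
    return sorted(set(expected_langs) - set(collector.alternates))


if __name__ == "__main__":
    # Hypothetical page head, for illustration only.
    page = (
        '<head>'
        '<link rel="alternate" hreflang="en" href="https://example.com/en/">'
        '<link rel="alternate" hreflang="de" href="https://example.com/de/">'
        '</head>'
    )
    print(missing_hreflang(page, ["en", "de", "fr"]))
```

Running this against the HTML of each language version shows which alternates are not declared on that page.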

-

@AtuliSulava Re: Website blog is hacked. Whats the best practice to remove bad urls

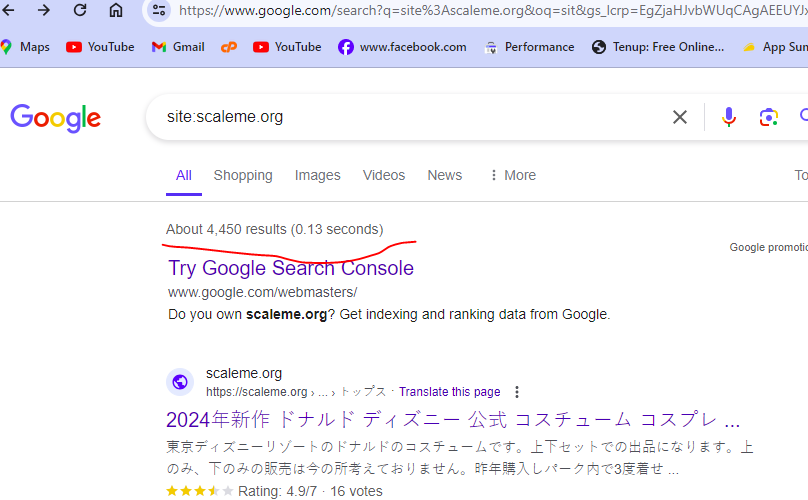

Something similar happened to me: many URLs are indexed, but they have no actual content. My website (scaleme) was hacked, and thousands of URLs with Japanese content were added to my site. These URLs are now indexed by Google. How can I remove them in bulk? (Screenshot attached.)

I am the owner of this website. Thousands of Japanese-language URLs (more than 4,400) were added to my site. I am aware of Google's URL removal tool, but submitting URLs for removal one by one is not feasible because so many of them have been indexed.

Is there a way to find these URLs, download a list, and remove them in bulk? Does Moz have a tool to solve this problem?
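For the "find these URLs" part, one possible approach is to export a list of indexed or crawled URLs and filter it with a small script. The Python sketch below assumes the spam URLs contain Japanese characters in the URL itself (often percent-encoded), which may not hold for every hack; the function name and example URLs are hypothetical.

```python
import re
from urllib.parse import unquote

# Hiragana, Katakana, and common CJK ideographs.
JAPANESE = re.compile(r"[\u3040-\u30ff\u4e00-\u9fff]")


def find_japanese_urls(urls):
    """Return the URLs whose percent-decoded form contains Japanese text."""
    return [u for u in urls if JAPANESE.search(unquote(u))]


if __name__ == "__main__":
    # Hypothetical exported URL list, for illustration only.
    exported = [
        "https://example.com/blog/real-post",
        "https://example.com/%E3%81%82%E3%81%84",
    ]
    for url in find_japanese_urls(exported):
        print(url)
```

The filtered list can then be reviewed for a shared path prefix or pattern before requesting removal.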

-

I've faced a similar indexing issue on my own website, https://mobilespackages.in/. To resolve it, ensure correct language markup and content accessibility on the affected pages. Review recent changes and content quality. Use Google Search Console for insights, or reach out to Google support.

-

@AtuliSulava It sounds like you're experiencing a frustrating issue with your website's indexing. I have faced this issue too: I once blocked my own website from being indexed by Google by mistake. Here are some steps you can take to troubleshoot and potentially resolve the problem:

Check Robots.txt: Ensure that your site's robots.txt file is not blocking search engine bots from accessing the content on the affected pages.

Review Meta Tags: Check the affected pages for a <meta name="robots" content="noindex"> tag. If it is present, remove it to allow indexing.

Content Accessibility: Make sure that the content on the affected pages is accessible to search engine bots. Check for any JavaScript, CSS, or other elements that might be blocking access to the content.

Canonical Tags: Verify that the canonical tags on the affected pages are correctly pointing to the preferred version of the page.

Structured Data Markup: Ensure that your pages have correct structured data markup to help search engines understand the content better.

URL Inspection: Use Google Search Console's URL Inspection tool (the successor to the retired "Fetch as Google" tool) to see how Googlebot sees your page and whether there are any issues with rendering or accessing the content.

Monitor Google Search Console: Keep an eye on Google Search Console for any messages or issues related to indexing and crawlability of your site.

Wait for Re-crawl: Sometimes, Google's indexing issues resolve themselves over time as the search engine re-crawls and re-indexes your site. If the problem persists, consider requesting a re-crawl through Google Search Console.
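The robots.txt, meta tag, and canonical checks above can be partly automated. Below is a minimal Python sketch using only the standard library; the function and class names and the example.com URLs are hypothetical, and it only inspects the raw HTML and robots.txt text you give it (it does not render JavaScript or read HTTP headers such as X-Robots-Tag).

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_blocked_by_robots(robots_txt, url, agent="Googlebot"):
    """True if the given robots.txt text disallows `url` for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


class HeadDirectives(HTMLParser):
    """Collect robots meta directives and the canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.robots += [d.strip().lower() for d in content.split(",") if d.strip()]
        elif tag == "link" and "canonical" in (attr.get("rel") or "").lower().split():
            self.canonical = attr.get("href")


def audit_page(html, page_url):
    """Return a list of indexing problems found in the page's HTML."""
    head = HeadDirectives()
    head.feed(html)
    issues = []
    if "noindex" in head.robots or "none" in head.robots:
        issues.append("noindex directive present")
    if head.canonical and head.canonical != page_url:
        issues.append(f"canonical points elsewhere: {head.canonical}")
    return issues


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    robots = "User-agent: *\nDisallow: /de/\n"
    print(is_blocked_by_robots(robots, "https://example.com/de/page"))
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    print(audit_page(page, "https://example.com/de/page"))
```

Comparing the output for a working language version against the affected one can show whether a directive differs between them.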

If the issue continues, it might be beneficial to seek help from a professional SEO consultant who can perform a detailed analysis of your website and provide specific recommendations tailored to your situation.

-

@AtuliSulava Perhaps indexing of these pages is blocked somewhere on the site. Look into how to check for an indexing ban and which site files it can be set in...

