Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will remain viewable - we have locked both new posts and new replies.

Exclude status codes in Screaming Frog

-

I have a very large ecommerce site I'm trying to spider using Screaming Frog. The problem is that the crawl keeps hanging, even though I have turned off the high memory safeguard under Configuration.

The site has approximately 190,000 pages according to the results of a Google site: command.

- The site architecture is almost completely flat. Limiting the crawl by depth is a possibility, but it would take quite a bit of manual labor, as there are literally hundreds of directories one level below the root.

- There are many, many duplicate pages. I've been able to exclude some of them from being crawled using the exclude configuration parameters.

- There are thousands of redirects. I haven't been able to exclude those from the spider b/c they don't have a distinguishing character string in their URLs.

Does anyone know how to exclude files using status codes? I know that would help.

If it helps, the site is kodylighting.com.

Thanks in advance for any guidance you can provide.

-

Thanks for your help. It literally was just the fact that the exclude had to be set before the crawl began and could not be changed during the crawl. Hopefully this gets changed, because sometimes during a crawl you find things you want to exclude that you did not know existed beforehand.

-

Are you sure it's just on Mac? Have you tried on PC? Do you have any other rules in include, or perhaps a conflicting rule in exclude? Try running a single exclude rule, ideally on another small site, to test.

Also from support if failing on all fronts:

- Mac version, please make sure you have the most up to date version of the OS which will update Java.

- Please uninstall, then reinstall the spider ensuring you are using the latest version and try again.

To be sure - http://www.youtube.com/watch?v=eOQ1DC0CBNs
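For anyone who wants to check a single exclude rule before starting a crawl, here is a rough sketch in Python. It assumes the exclude rules are regular expressions that must match the whole URL; that matching rule, the `is_excluded` helper, and the example.com patterns are all illustrative assumptions, so check the user guide for the exact behaviour.

```python
import re

# Hypothetical exclude rules. Assumption: each rule is a regular expression
# that has to match the entire URL, not just part of it.
EXCLUDE_PATTERNS = [
    r"http://www\.example\.com/clearance/.*",  # everything under one folder
    r".*\?sort=.*",                            # any URL with a sort parameter
]


def is_excluded(url, patterns=EXCLUDE_PATTERNS):
    """Return True if any pattern matches the whole URL."""
    return any(re.fullmatch(pattern, url) for pattern in patterns)


if __name__ == "__main__":
    for url in [
        "http://www.example.com/clearance/lamps.html",
        "http://www.example.com/lamps?sort=price",
        "http://www.example.com/lamps.html",
    ]:
        print(url, "excluded" if is_excluded(url) else "crawled")
```

Testing one rule at a time like this makes it easier to spot a pattern that is too narrow (for example, one missing the trailing `.*`) before committing to a long crawl.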

-

Does the exclude function work on Mac? I have tried every possible way to exclude folders and have not been successful while running an analysis.

-

That's exactly the problem: the redirects are dispersed randomly throughout the site. Although the job's still running, it now appears as though there's almost a 1-to-1 correlation between pages and redirects on the site.

I also heard from Dan Sharp via Twitter. He said, "You can't, as we'd have to crawl a URL to see the status code. You can right click and remove after though!"

Thanks again Michael. Your thoroughness and follow-through are appreciated.
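Since the status code is only known after a URL has been crawled, another way to drop the redirects is to filter them out of an exported report afterwards. A minimal sketch, assuming the export is a CSV with `Address` and `Status Code` columns (the column names, the sample rows, and the `drop_redirects` helper are assumptions for illustration, not taken from a real export):

```python
import csv
import io

# Made-up sample standing in for an exported crawl report.
SAMPLE_EXPORT = """Address,Status Code
http://www.example.com/,200
http://www.example.com/old-lamp,301
http://www.example.com/sale,302
http://www.example.com/missing,404
"""


def drop_redirects(csv_text, status_column="Status Code"):
    """Return the rows whose status code is not in the 3xx range."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row for row in reader
        if not row[status_column].strip().startswith("3")
    ]


kept = drop_redirects(SAMPLE_EXPORT)

if __name__ == "__main__":
    for row in kept:
        print(row["Address"], row["Status Code"])
```

This does nothing to shorten the crawl itself; it only keeps the redirected URLs out of the inventory you work from afterwards.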

-

Took another look, also looked at documentation/online and don't see any way to exclude URLs from crawl based on response codes. As I see it you would only want to exclude on name or directory as response code is likely to be random throughout a site and impede a thorough crawl.

-

Thank you Michael.

You're right. I was on a 64-bit machine running a 32-bit version of Java. I updated it and the scan has been running for more than 24 hours now without hanging. So thank you.

If anyone else knows of a way to exclude files using status codes I'd still like to learn about it. So far the scan is showing me 20,000 redirected files which I'd just as soon not inventory.

-

I don't think you can filter out on response codes.

However, first I would make sure you are running the right version of Java if you are on a 64-bit machine. The 32-bit version works, but you cannot increase its memory allocation, which could be why you are running into problems. Take a look at http://www.screamingfrog.co.uk/seo-spider/user-guide/general/ under Memory.
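One quick way to tell which Java you have is to look at the output of `java -version`: 64-bit builds typically include "64-Bit" in the VM line, while 32-bit builds do not. A small sketch of that check follows; the `is_64bit_java` helper and the sample outputs are illustrative, and the exact wording of the version banner may differ between Java vendors and releases.

```python
def is_64bit_java(version_output):
    """Guess whether `java -version` output describes a 64-bit JVM.

    Assumption: 64-bit builds mention "64-Bit" in the VM line.
    """
    return "64-Bit" in version_output


# Illustrative banners, not captured from a real machine.
SAMPLE_64 = (
    'java version "1.7.0_45"\n'
    "Java(TM) SE Runtime Environment (build 1.7.0_45-b18)\n"
    "Java HotSpot(TM) 64-Bit Server VM (build 24.45-b08, mixed mode)\n"
)
SAMPLE_32 = (
    'java version "1.7.0_45"\n'
    "Java(TM) SE Runtime Environment (build 1.7.0_45-b18)\n"
    "Java HotSpot(TM) Client VM (build 24.45-b08, mixed mode, sharing)\n"
)

if __name__ == "__main__":
    # To check the local machine instead, capture stderr, since
    # `java -version` writes its banner there:
    #   import subprocess
    #   out = subprocess.run(["java", "-version"],
    #                        capture_output=True, text=True).stderr
    print("64-bit sample:", is_64bit_java(SAMPLE_64))
    print("32-bit sample:", is_64bit_java(SAMPLE_32))
```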


Related Questions

-

422 vs 404 Status Codes

We work with an automotive industry platform provider and whenever a vehicle is removed from inventory, a 404 error is returned. Being that inventory moves so quickly, we have a host of 404 errors in search console. The fix that the platform provider proposed was to return a 422 status code vs a 404. I'm not familiar with how a 422 may impact our optimization efforts. Is this a good approach, since there is no scalable way to 301 redirect all of those dead inventory pages.

Technical SEO | | AfroSEO0 -

Include or exclude noindex urls in sitemap?

We just added tags to our pages with thin content. Should we include or exclude those urls from our sitemap.xml file? I've read conflicting recommendations.

Technical SEO | | vcj0 -

How to redirect 302 status to 301 status code using wordpress

I just ran the link opportunity option within site explorer and it shows that 31 pages are currently in a 302 status. Should I try to convert the 302's to 301's? And what is the easiest way to do this? I see several wordpress plugins that claim to do 301 redirects but I don't know which to choose. Any help would be greatly appreciated!

Technical SEO | | vmsolu0 -

Some URLs were not accessible to Googlebot due to an HTTP status error.

Hello I'm a seo newbie and some help from the community here would be greatly appreciated. I have submitted the sitemap of my website in google webmasters tools and now I got this warning: "When we tested a sample of the URLs from your Sitemap, we found that some URLs were not accessible to Googlebot due to an HTTP status error. All accessible URLs will still be submitted." How do I fix this? What should I do? Many thanks in advance.

Technical SEO | | GoldenRanking140 -

Does Title Tag location in a page's source code matter?

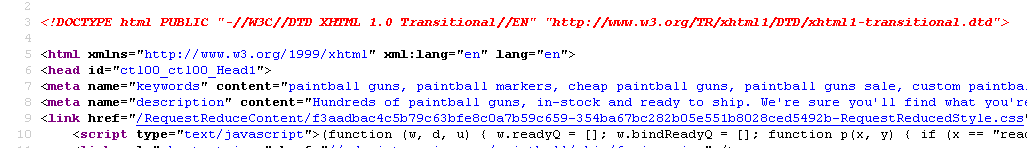

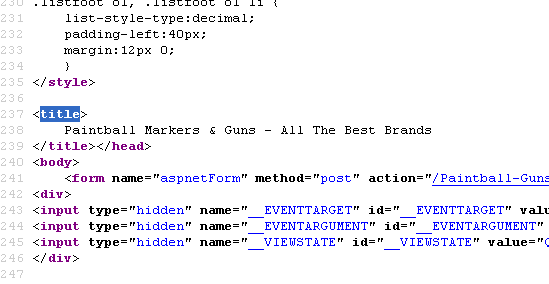

Currently our meta description is on line 8 for our page - http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx

Technical SEO | | Istoresinc The title tag, however sits below a bunch of code on line 237

The title tag, however sits below a bunch of code on line 237

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0 -

Screaming Frog Content Showing charset=UTF-8

I am running a site through Screaming Frog and many of the pages under "Content" are reading text/html; charset=UTF-8. Does this harm ones SEO and what does this really mean? I'm running his site along with this competitors and the competitors seems very clean with content pages reading text/html. What does one do to change this if it is a negative thing? Thank you

Technical SEO | | seoessentials0 -

How much impact does bad html coding really have on SEO?

My client has a site that we are trying to optimise. However the code is really pretty bad. There are 205 errors showing when W3C validating. The >title>, , <keywords> tags are appearing twice. There is truly excessive javascript. And everything has been put in tables.</keywords> How much do you think this is really impacting the opportunity to rank? There has been quite a bit of discussion recently along the lines of is on-page SEO impacting anymore. I just want to be sure before I recommend a whole heap of code changes that could cost her a lot - especially if the impact/return could be miniscule. Should it all be cleaned up? Many thanks

Technical SEO | | Chammy0 -

How Add 503 status to IIS 6.0

Hi, Our IS department is bringing down our network for maintenance this weekend for 24 hours. I am worried about search engine implications. all Traffic is being diverted, and the diverted traffic is being sent to another server with IIS 6.0 From all research i have done it appears creating a custom 503 error message in IIS 6 is not possible Source: http://technet.microsoft.com/en-us/library/bb877968.aspx So my question is does anyone have any suggestions on how to do a proper 503 temporarily unavailable in IIS 6.0 with a custom error message? Thanks

Technical SEO | | Jinx146780