Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Not all images indexed in Google

-

Hi all,

Recently, got an unusual issue with images in Google index. We have more than 1,500 images in our sitemap, but according to Search Console only 273 of those are indexed. If I check Google image search directly, I find more images in index, but still not all of them.

For example this post has 28 images and only 17 are indexed in Google image. This is happening to other posts as well.

Checked all possible reasons (missing alt, image as background, file size, fetch and render in Search Console), but none of these are relevant in our case. So, everything looks fine, but not all images are in index.

Any ideas on this issue?

Your feedback is much appreciated, thanks

-

Fetching, rendering, caching and indexing are all different. Sometimes they're all part of the same process, sometimes not. When Google 'indexes' images, that's primarily for its image search engine (Google Images). 'Indexing' something means that Google is listing that resource within its own search results for one reason or another. For the same reasons that Google rarely indexes all of your web-pages, Google also rarely indexes all of your images.

That doesn't mean that Google 'can't see' your images and has an imperfect view of your web-page. It simply means that Google does not believe the image which you have uploaded are 'worthy' enough to be served to an end-user who is performing a certain search on Google images. If you think that gaining normal web rankings is tricky, remember that most users only utilise Google images for certain (specific) reasons. Maybe they're trying to find a meme to add to their post on a form thread or as a comment on a Social network. Maybe they're looking for PNG icons to add into their PowerPoint presentations.

In general, images from the commercial web are... well, they're commercially driven (usually). When was the last time you expressedly set out to search for Ads to look at on Google images? Never? Ok then.

First Google will fetch a page or resource by visiting that page or resource's URL. If the resource or web-page is of moderate to high value, Google may then render the page or resource (Google doesn't always do this, but usually it's to get a good view of a page on the web which is important - yet which is heavily modified by something like JS or AJAX - and thus all the info isn't in the basic 'source code' / view-source).

Following this, Google may decide to cache the web-page or resource. Finally, if the page or resource is deemed worthy enough and Google's algorithm(s) decide that it could potentially satisfy a certain search query (or array thereof) - the resource or page may be indexed. All of this can occur in various patterns, e.g: indexing a resource without caching it or caching a resource without indexing it (there are many reasons for all of this which I won't get into now).

On the commercial web, many images are stock or boiler-plate visuals from suppliers. If Google already has the image you are supplying indexed at a higher resolution or at superior quality (factoring compression) and if your site is not a 'main contender' in terms of popularity and trust metrics, Google probably won't index that image on your site. Why would Google do so? It would just mean that when users performed an image search, they would see large panes of results which were all the same image. Users only have so much screen real-estate (especially with the advent of mobile browsing popularity). Seeing loads of the same picture at slightly different resolutions would just be annoying. People want to see a variety, a spread of things! **That being said **- your images are lush and I don't think they're stock rips!

If some images on your page, post or website are not indexed - it's not necessarily an 'issue' or 'error'.

Looking at the post you linked to: https://flothemes.com/best-lightroom-presets-photogs/

I can see that it sits on the "flothemes.com" domain. It has very strong link and trust metrics:

Ahrefs - Domain rating 83

Moz - Domain Authority - 62

As such, you'd think that most of these images would be unique (I don't have time to do a reverse image search on all of them) - also because the content seems really well done. I am pretty confident (though not certain) that quality and duplication are probably not to blame in this instance.

That makes me think, hmm maybe some of the images don't meet Google's compression standards.

Check out these results (https://gtmetrix.com/reports/flothemes.com/xZARSfi5) for the page / post you referenced, on GTMetrix (I find it superior to Google's Page-Speed Insights) and click on the "Waterfall" tab.

You can see that some of the image files have pretty lard 'bars' in terms of the total time it took to load in those individual resources. The main offenders are this image: https://l5vd03xwb5125jimp1nwab7r-wpengine.netdna-ssl.com/wp-content/uploads/2016/01/PhilChester-Portfolio-40.jpg (over 2 seconds to pull in by itself) and this one: https://l5vd03xwb5125jimp1nwab7r-wpengine.netdna-ssl.com/wp-content/uploads/2017/04/Portra-1601-Digital-2.png (around 1.7 seconds to pull in)

Check out the resource URLs. They're being pulled into your page, but they're not hosted on your website. As such - how could Google index those images for your site when they're pulled in externally? Maybe there's some CDN stuff going on here. Maybe Google is indexing some images on the CDN because it's faster and not from your base-domain. This really needs looking into in a lot more detail, but I smell the tails of something interesting there.

If images are deemed to be uncompressed or if their resolution is just way OTT (such that most users would never need even half of the full deployment resolution) - Google won't index those images. Why? Well they don't want Google Images to become a lag-fest I guess!

**Your main issue is that you are not serving 'scaled' images **(or apparently, optimising them). On that same GTMetrix report, check out the "PageSpeed" tab. Yeah, you scored an F by the way (that's a fail) and it's mainly down to your image deployment.

Google thinks one or more of the following:

- You haven't put enough effort into optimising some of your images

- Some of your images are not worth indexing or it can find them somewhere else

- Google is indexing some of the images from your CDN instead of your base domain

- Google is having trouble indexing images for your domain, which are permanently or temporarily stored off-site (and the interference is causing Google to just give up)

I know there's a lot to think about here, but I hope I have at least put you on the 'trail' a reasonable solution

This was fun to examine, so thanks for the interesting question!

Got a burning SEO question?

Subscribe to Moz Pro to gain full access to Q&A, answer questions, and ask your own.

Browse Questions

Explore more categories

-

Moz Tools

Chat with the community about the Moz tools.

-

SEO Tactics

Discuss the SEO process with fellow marketers

-

Community

Discuss industry events, jobs, and news!

-

Digital Marketing

Chat about tactics outside of SEO

-

Research & Trends

Dive into research and trends in the search industry.

-

Support

Connect on product support and feature requests.

Related Questions

-

Removing a site from Google index with no index met tags

Hi there! I wanted to remove a duplicated site from the google index. I've read that you can do this by removing the URL from Google Search console and, although I can't find it in Google Search console, Google keeps on showing the site on SERPs. So I wanted to add a "no index" meta tag to the code of the site however I've only found out how to do this for individual pages, can you do the same for a entire site? How can I do it? Thank you for your help in advance! L

Technical SEO | | Chris_Wright1 -

Should search pages be indexed?

Hey guys, I've always believed that search pages should be no-indexed but now I'm wondering if there is an argument to index them? Appreciate any thoughts!

Technical SEO | | RebekahVP0 -

Google has deindexed a page it thinks is set to 'noindex', but is in fact still set to 'index'

A page on our WordPress powered website has had an error message thrown up in GSC to say it is included in the sitemap but set to 'noindex'. The page has also been removed from Google's search results. Page is https://www.onlinemortgageadvisor.co.uk/bad-credit-mortgages/how-to-get-a-mortgage-with-bad-credit/ Looking at the page code, plus using Screaming Frog and Ahrefs crawlers, the page is very clearly still set to 'index'. The SEO plugin we use has not been changed to 'noindex' the page. I have asked for it to be reindexed via GSC but I'm concerned why Google thinks this page was asked to be noindexed. Can anyone help with this one? Has anyone seen this before, been hit with this recently, got any advice...?

Technical SEO | | d.bird0 -

What are best options for website built with navigation drop-down menus in JavaScript, to get those menus indexed by Google?

This concerns f5.com, a large website with navigation menus that drop down when hovered over. The sub nav items (example: “DDoS Protection”) are not cached by Google and therefore do not distribute internal links properly to help those sub-pages rank well. Best option naturally is to change the nav menus from JS to CSS but barring that, is there another option? Will Schema SiteNavigationElement work as an alternate?

Technical SEO | | CarlLarson0 -

My video sitemap is not being index by Google

Dear friends, I have a videos portal. I created a video sitemap.xml and submit in to GWT but after 20 days it has not been indexed. I have verified in bing webmaster as well. All videos are dynamically being fetched from server. My all static pages have been indexed but not videos. Please help me where am I doing the mistake. There are no separate pages for single videos. All the content is dynamically coming from server. Please help me. your answers will be more appreciated................. Thanks

Technical SEO | | docbeans0 -

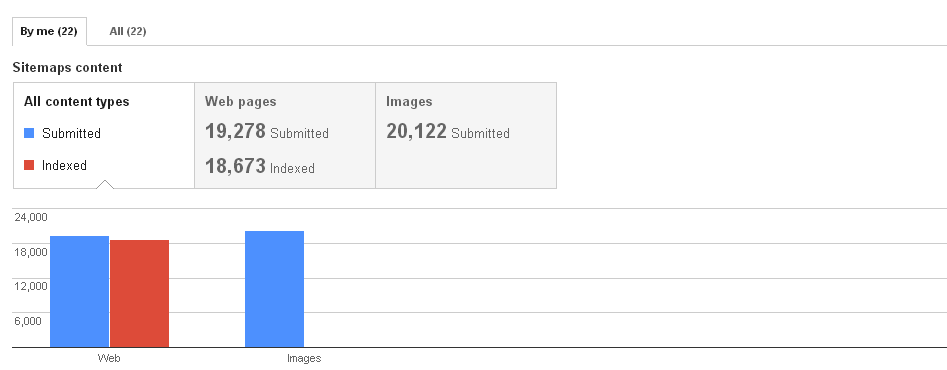

Image Indexing Issue by Google

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

Correct linking to the /index of a site and subfolders: what's the best practice? link to: domain.com/ or domain.com/index.html ?

Dear all, starting with my .htaccess file: RewriteEngine On

Technical SEO | | inlinear

RewriteCond %{HTTP_HOST} ^www.inlinear.com$ [NC]

RewriteRule ^(.*)$ http://inlinear.com/$1 [R=301,L] RewriteCond %{THE_REQUEST} ^./index.html

RewriteRule ^(.)index.html$ http://inlinear.com/ [R=301,L] 1. I redirect all URL-requests with www. to the non www-version...

2. all requests with "index.html" will be redirected to "domain.com/" My questions are: A) When linking from a page to my frontpage (home) the best practice is?: "http://domain.com/" the best and NOT: "http://domain.com/index.php" B) When linking to the index of a subfolder "http://domain.com/products/index.php" I should link also to: "http://domain.com/products/" and not put also the index.php..., right? C) When I define the canonical ULR, should I also define it just: "http://domain.com/products/" or in this case I should link to the definite file: "http://domain.com/products**/index.php**" Is A) B) the best practice? and C) ? Thanks for all replies! 🙂

Holger0 -

How to stop my webmail pages not to be indexed on Google ??

when i did a search in google for Site:mywebsite.com , for a list of pages indexed. Surprisingly the following come up " Webmail - Login " Although this is associated with the domain , this is a completely different server , this the rackspace email server browser interface I am sure that there is nothing on the website that links or points to this.

Technical SEO | | UIPL

So why is Google indexing it ? & how do I get it out of there. I tried in webmaster tool but I could not , as it seems like a sub-domain. Any ideas ? Thanks Naresh Sadasivan0