Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Good alternatives to Xenu's Link Sleuth and AuditMyPc.com Sitemap Generator

-

I am working on scraping title tags from websites with 1-5 million pages. Xenu's Link Sleuth seems to be the best option for this at this point. Sitemap Generator from AuditMyPc.com seems to work too, but it starts hanging when the sitemap file it is working on becomes too large, so it looks like it won't be good for websites of this size. I know that Scrapebox can scrape title tags from a list of URLs, but this is not needed, since both of the above-mentioned tools already do it.
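For readers who want to see what "scraping a title tag" amounts to, here is a minimal sketch using only Python's standard library. It is not how Xenu, Scrapebox or any other tool mentioned here works internally; `TitleParser` and `extract_title` are hypothetical names chosen for illustration, and the page HTML is assumed to have been downloaded already.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element of a page."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self._done = False
        self._parts = []

    def handle_starttag(self, tag, attrs):
        # Only the first <title> counts; later ones are ignored.
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        if self._in_title:
            self._parts.append(data)

    @property
    def title(self):
        # Collapse runs of whitespace and trim the ends.
        return " ".join("".join(self._parts).split())


def extract_title(html):
    """Return the page title, or an empty string if there is none."""
    parser = TitleParser()
    parser.feed(html)
    return parser.title
```

At millions of pages the parsing is cheap; the download time per page is what dominates.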

I know about DeepCrawl.com too, but it is paid, and it would be very expensive with this number of pages and websites (5 million URLs is $1750 per month; I could get a better deal on multiple websites, but this obviously does not make sense to me, it needs to be free, more or less). SEO Spider from Screaming Frog is not good for large websites.

So, in general, what is the best and most time-efficient way to work on something like this? Are there any other options?

Thanks.

-

import.io and it's free

-

Another idea that I have is to look for the sitemaps of these websites. There may be a way to get a list of all the URLs right away, without crawling. Look at /robots.txt and /sitemap.xml, search for the sitemap in Google, things like that. If there are URLs, the title tags can be scraped with Scrapebox, and according to their website it can be done relatively fast.
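The sitemap idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the robots.txt and sitemap bodies are assumed to have been fetched already, and the function names `sitemaps_from_robots` and `locs_from_sitemap` are made up for this example.

```python
import xml.etree.ElementTree as ET


def sitemaps_from_robots(robots_txt):
    """Return the URLs listed on 'Sitemap:' lines of a robots.txt body."""
    found = []
    for line in robots_txt.splitlines():
        # Split on the first colon only, so the URL's own colons survive.
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "sitemap":
            found.append(value.strip())
    return found


def locs_from_sitemap(xml_text):
    """Return every <loc> value from a sitemap or sitemap-index document."""
    root = ET.fromstring(xml_text)
    # Tags carry the sitemap namespace, so compare on the local name only.
    return [
        el.text.strip()
        for el in root.iter()
        if el.tag.rsplit("}", 1)[-1] == "loc" and el.text
    ]
```

For a sitemap index, the `<loc>` values point at further sitemap files, so the second function would be applied again to each of those.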

Edit:

Somebody suggested http://inspyder.com, which is around $40 and has a free trial. It may be a good option too.

-

So there is probably no way to tell whether I have all the URLs of the site, or what percentage I have. I may have 80 percent of the site, or even less, and not know it, I would assume. This is part of working on sites (I've never needed it before, but I am working on something like this now), and there is no good tool that does the work.

I have a website with 33,500,000 pages. I've been running the tool for close to 5 hours, and I have around 125,000 URLs so far. This means that it would take 1340 hours to do the entire site. That is close to two months of running the program 24 hours a day, which does not make sense. And besides that, I was planning to do it on up to 100 sites. That is definitely not workable, although I would say it should be possible, software-wise.
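The estimate above can be reproduced with a short calculation. The function name `estimate_crawl_hours` is hypothetical, and the sketch assumes the crawl rate stays constant, which a real crawl may not do.

```python
def estimate_crawl_hours(total_pages, pages_done, hours_elapsed):
    """Project total crawl time from the rate observed so far."""
    pages_per_hour = pages_done / hours_elapsed
    return total_pages / pages_per_hour


hours = estimate_crawl_hours(33_500_000, 125_000, 5)
days = hours / 24
print(f"{hours:.0f} hours, about {days:.1f} days")  # 1340 hours, about 55.8 days
```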

I will try your method and see what I get. I don't have much time for experimenting with it either. I need to work and generate results...

Edit:

I will know how the number of URLs compares to the 33,500,000 figure, obviously, but what's indexed in Google is not necessarily the complete website either. The method that you are suggesting is not perfect, but I don't have two months to wait either, obviously...

-

You will crawl some of the same URLs - that's why you remove duplicates at the end. There's no way to keep it from re-crawling some of the URLs, as far as I know.

But yes, get it to recognize 600-800k URLs and then split the file. (Export, put the links in as an html file and start over.) Let me break it down the best I can:

1. Crawl your main (seed) URL until you've recognized 800k.
2. Pause/stop and then export the results.
3. Create an HTML file with the URLs from the export, separated 50k to 100k at a time.
4. Recrawl those files in Xenu with the "file" option.
5. Build them back up to 800k or so recognized URLs again and repeat.

After a few (4-6) iterations of this, you'll have most URLs crawled on most sites no matter how large. Doing it this way, I think you could expect to crawl about 2-3 million URLs a day. If you really paid attention to it and created smaller files but ran them more frequently, you could get 4-5 million, I think. I've crawled close to that in a day for a scrape once.
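The "create an HTML file with the URLs" step could be scripted roughly as follows. This is a sketch, not part of Xenu: it assumes the exported URLs have already been read into a Python list, and the function name `write_link_files` and the `links_001.html` naming scheme are made up for illustration.

```python
import html
from pathlib import Path


def write_link_files(urls, out_dir, chunk_size=100_000):
    """Write urls into numbered HTML files of at most chunk_size links each."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for start in range(0, len(urls), chunk_size):
        chunk = urls[start:start + chunk_size]
        # One plain anchor per URL is enough for a link checker to follow.
        links = "\n".join(
            f'<a href="{html.escape(u, quote=True)}">{html.escape(u)}</a><br>'
            for u in chunk
        )
        path = out / f"links_{len(paths) + 1:03d}.html"
        path.write_text(f"<html><body>\n{links}\n</body></html>\n", encoding="utf-8")
        paths.append(path)
    return paths
```

With 800k recognized URLs and the default chunk size, this would produce eight files, each of which can be loaded as a local file for the next pass.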

-

Thanks. It is good to hear that there is a way to do what I am trying to do, especially on 50 or more large sites.

I've been running Xenu on a 33,500,000-page site for a little over 4 hours and 15 minutes, and this is what I have so far:

Close to 500,000 URLs recognized and only 115,000 processed, it looks like. I am manually saving to a file every now and then, as there is no way to auto-save as far as I could tell (there could be, I am not sure; there are not too many options there).

I am not sure, based on your advice, how I could speed up this process. Should I wait from this point, then stop the program, divide the file into 8 separate files, and load them into the program separately? Will the program then recognize these separate files as one and continue crawling for new URLs? If possible, please give more detail on how this would need to be done, as I don't fully understand. I also don't see how this could do this large website in one day, or let's say even five days...

Edit:

I think I now understand what you mean: get 8 separate files (it can be 6 or, let's say, 10) and run them all at the same time. But still, how will the program know not to crawl and download the same URLs across all the files? In general, I would like to ask for a better explanation of how this needs to be done.

Thanks.

-

Let Xenu crawl until you have about 800k links. Then export the file and add it back as 8 x 100k lists of URLs. You can then run it again and repeat the process. By the time you have split it 4-5 times, you can then export everything, put it into one file and remove duplicates.

Xenu, done this way, with 100 threads, is probably the fastest way to do the whole thing. I think you could get the 5M results in under 1 day of work this way.
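The final "put it into one file and remove duplicates" step might look like the sketch below. It assumes each export has been read into a list of URL strings; `merge_unique` is a hypothetical helper name, and dropping `#fragment` suffixes before comparing is an assumption of this example, not something the thread specifies.

```python
from urllib.parse import urldefrag


def merge_unique(url_lists):
    """Merge several lists of URLs, keeping the first occurrence of each."""
    seen = set()
    merged = []
    for urls in url_lists:
        for raw in urls:
            # Assumption: URLs differing only by #fragment are the same page.
            url = urldefrag(raw.strip()).url
            if url and url not in seen:
                seen.add(url)
                merged.append(url)
    return merged
```

A set of a few million URL strings fits comfortably in memory on a typical desktop, so this can run in one pass over all the exports.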

-

Ok. So it looks like Screaming Frog may be a good way to go too, if not better. Xenu is free, which is a big plus. On top of that, Screaming Frog's SEO Spider is based on a yearly subscription, not a one-time fee. For those who don't know, there is a version of Xenu for large sites, which can be found on their website. They also have a support group at groups.yahoo.com (find it through there); I am not sure if it is still active.

Xenu upgraded to the version for larger sites may be the best way to go, since it is free. I've been testing AuditMyPc.com Sitemap Creator and the large-site version of Xenu, and the first one has already hung (I stopped using it). They were both collecting the info at about the same speed, but Xenu is working better (it does not hang, and looks like it should be good). Either way, this will take quite a lot of time, as previously mentioned.

-

I agree with Moosa and Danny. I use Screaming Frog (full paid version) on a stripped-down Windows machine with an SSD and 16GB of performance RAM. I have also downloaded the 64-bit version of Java and increased the memory allocation for Screaming Frog to 12GB (the default limit is 512MB). Here's how: http://www.screamingfrog.co.uk/seo-spider/user-guide/general/ (look at the section Increasing Memory on Windows 32 & 64-bit)

I did this because I was having issues crawling a large site. Since I put this system in place it has eaten any site I have thrown at it, so it works well for me personally. In terms of crawl speed, large sites such as the ones you mention will still take a while. You can set the crawl speed in Screaming Frog, but you need to be careful, as you can overload the server of the site you are crawling and cause issues...
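The point about not overloading the target server applies to any crawler, including a home-made script. As a generic illustration only (this is not Screaming Frog's speed setting, and `Throttle` is a hypothetical class), a simple request throttle in Python could look like this:

```python
import time


class Throttle:
    """Spaces calls to wait() at least 1/max_per_second seconds apart."""

    def __init__(self, max_per_second, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 1.0 / max_per_second
        # clock and sleep are injectable so the class can be tested quickly.
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = None

    def wait(self):
        """Block until the next request is allowed, then reserve a slot."""
        now = self._clock()
        if self._next_allowed is not None and now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.min_interval
```

A crawler would call `wait()` before each request; for example, `Throttle(5)` keeps a single worker to roughly five requests per second.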

Another option would be to buy a server and configure it for Screaming Frog and other tools you may use. This gives you options to grow the system as your needs grow. It all depends on budget and how often you crawl large sites. Obviously, buying a server such as a Windows instance on Amazon EC2 will cost more in the long run, but it takes the strain away from your own systems and networks, plus you should effectively never hit capacity on the server, as you can just upgrade. It will also allow you to remote desktop in from whatever system you use, yes, even a Mac.

Hope this helps

-

I believe that when you are talking about 1 to 5 million URLs it is going to take time no matter what tool you use, but if you ask me, Screaming Frog is the better tool, and with the paid version you can still crawl websites with a few million URLs.

Xenu is not a bad choice either, but it's kind of confusing and there is a possibility that it can break.

Hope this helps!

-

I was facing a similar issue with huge sites that have hundreds of thousands of pages. But ever since I upgraded my computer with more RAM and an SSD, it runs way better on huge sites as well. I tried several scrapers and I still believe Xenu is the best one and the most recommended by SEO experts. Also, you might want to check this post on the Moz Blog about Xenu:

http://moz.com/blog/xenu-link-sleuth-more-than-just-a-broken-links-finder

Good luck!


Related Questions

-

Google has deindexed a page it thinks is set to 'noindex', but is in fact still set to 'index'

A page on our WordPress powered website has had an error message thrown up in GSC to say it is included in the sitemap but set to 'noindex'. The page has also been removed from Google's search results. Page is https://www.onlinemortgageadvisor.co.uk/bad-credit-mortgages/how-to-get-a-mortgage-with-bad-credit/ Looking at the page code, plus using Screaming Frog and Ahrefs crawlers, the page is very clearly still set to 'index'. The SEO plugin we use has not been changed to 'noindex' the page. I have asked for it to be reindexed via GSC but I'm concerned why Google thinks this page was asked to be noindexed. Can anyone help with this one? Has anyone seen this before, been hit with this recently, got any advice...?

Technical SEO | | d.bird0 -

My Website's Home Page is Missing on Google SERP

Hi All, I have a WordPress website which has about 10-12 pages in total. When I search for the brand name on Google Search, the home page URL isn't appearing on the result pages while the rest of the pages are appearing. There're no issues with the canonicalization or meta titles/descriptions as such. What could possibly the reason behind this aberration? Looking forward to your advice! Cheers

Technical SEO | | ugorayan0 -

Getting high priority issue for our xxx.com and xxx.com/home as duplicate pages and duplicate page titles can't seem to find anything that needs to be corrected, what might I be missing?

I am getting high priority issue for our xxx.com and xxx.com/home as reporting both duplicate pages and duplicate page titles on crawl results, I can't seem to find anything that needs to be corrected, what am I be missing? Has anyone else had a similar issue, how was it corrected?

Technical SEO | | tgwebmaster0 -

Does Title Tag location in a page's source code matter?

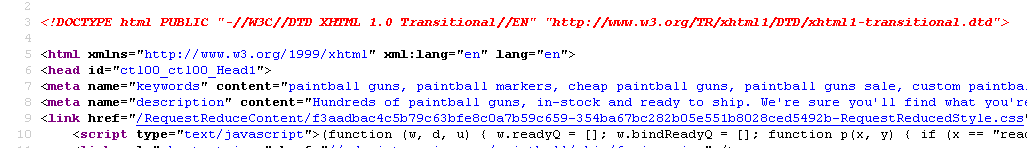

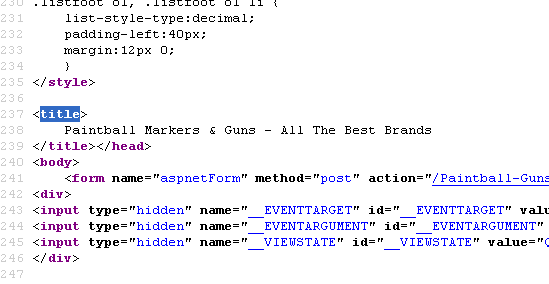

Currently our meta description is on line 8 for our page - http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx

Technical SEO | | Istoresinc The title tag, however sits below a bunch of code on line 237

The title tag, however sits below a bunch of code on line 237

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0 -

Http to https - is a '302 object moved' redirect losing me link juice?

Hi guys, I'm looking at a new site that's completely under https - when I look at the http variant it redirects to the https site with "302 object moved" within the code. I got this by loading the http and https variants into webmaster tools as separate sites, and then doing a 'fetch as google' across both. There is some traffic coming through the http option, and as people start linking to the new site I'm worried they'll link to the http variant, and the 302 redirect to the https site losing me ranking juice from that link. Is this a correct scenario, and if so, should I prioritise moving the 302 to a 301? Cheers, Jez

Technical SEO | | jez0000 -

Structuring URL's for better SEO

Hello, We were rolling our fresh urls for our new service website. Currently we have our structure as www.practo.com/health/dental/clinic/bangalore We like to have it as www.practo.com/health/dental-clinic-bangalore Can someone advice us better which one of the above structure would work out better and why? Should this be a focus of attention while going ahead since this is like a search engine platform for patients looking out for actual doctors. Thanks, Aditya

Technical SEO | | shanky10 -

Google's "cache:" operator is returning a 404 error.

I'm doing the "cache:" operator on one of my sites and Google is returning a 404 error. I've swapped out the domain with another and it works fine. Has anyone seen this before? I'm wondering if G is crawling the site now? Thx!

Technical SEO | | AZWebWorks0 -

What's the difference between a category page and a content page

Hello, Little confused on this matter. From a website architectural and content stand point, what is the difference between a category page and a content page? So lets say I was going to build a website around tea. My home page would be about tea. My category pages would be: White Tea, Black Tea, Oolong Team and British Tea correct? ( I Would write content for each of these topics on their respective category pages correct?) Then suppose I wrote articles on organic white tea, white tea recipes, how to brew white team etc...( Are these content pages?) Do I think link FROM my category page ( White Tea) to my ( Content pages ie; Organic White Tea, white tea receipes etc) or do I link from my content page to my category page? I hope this makes sense. Thanks, Bill

Technical SEO | | wparlaman0