Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

PDF for link building - avoiding duplicate content

-

Hello,

We've got an article that we're turning into a PDF. Both the article and the PDF will be on our site. This PDF is a good, thorough piece of content on how to choose a product.

We're going to strip out all of the links to our in the article and create this PDF so that it will be good for people to reference and even print. Then we're going to do link building through outreach since people will find the article and PDF useful.

My question is, how do I use rel="canonical" to make sure that the article and PDF aren't duplicate content?

Thanks.

-

Hey Bob

I think you should forget about any kind of perceived conventions and have whatever you think works best for your users and goals.

Again, look at Unbounce: that is a custom landing page with a homepage link (to share the love) but not the general site navigation.

They also have a footer to do a bit more link love but really, do what works for you.

Forget conventions - do what works!

Hope that helps

Marcus -

I see, thanks! I think it's important not to have the ecommerce navigation on the page promoting the pdf. What would you say is ideal as far as the graphical and navigation components of the page with the PDF on it - what kind of navigation and graphical header should I have on it?

-

Yep, check the HTTP headers with webbug or there are a bunch of browser plugins that will let you see the headers for the document.
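If you would rather check from a script than a browser plugin, something along these lines should work. This is only a minimal sketch: it assumes the server answers HEAD requests, the function names are made up for illustration, and the URL in the usage comment is a placeholder rather than a real address.

```python
import re
import urllib.request


def canonical_from_link_header(value):
    """Return the URL marked rel="canonical" in a Link header value, or None."""
    if not value:
        return None
    # A Link header can carry several comma-separated entries: <url>; rel="..."
    for match in re.finditer(r'<([^>]*)>\s*((?:;[^,<]*)*)', value):
        url, params = match.group(1), match.group(2)
        if re.search(r'rel\s*=\s*"?[^";,]*\bcanonical\b', params, re.IGNORECASE):
            return url
    return None


def fetch_canonical(url):
    """Send a HEAD request and return the canonical URL from the response headers."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        # There may be more than one Link header, so join them before parsing.
        links = response.headers.get_all("Link") or []
    return canonical_from_link_header(", ".join(links))


# Hypothetical usage (placeholder URL):
# print(fetch_canonical("http://www.domain.com/pdfs/white-papers.pdf"))
```

If `fetch_canonical` returns `None` for the PDF, the header is not being sent, which usually points at the server configuration rather than the PDF itself.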

That said, I would push to drive the links to the page though rather than the document itself and just create a nice page that houses the document and make that the link target.

You could even make the PDF link available only by email once they have signed up, or some such. Canonical is only a hint to search engines, so you would still be better off getting those links flooding into a real page on the site.

You could even offer up some HTML embed code that links to your main page, to make linking easier for folks. If you look at any savvy infographic, the publisher will try to draw links into a page rather than the image itself, for the very same reasons.

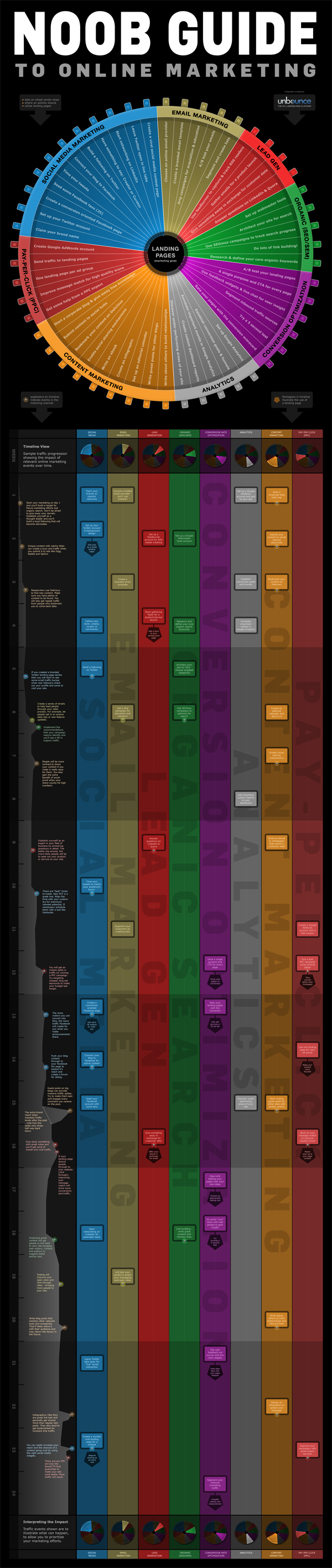

If you look at something like the Noobs Guide to Online Marketing from Unbounce then you will see something like this as the suggested linking code:

[Infographic image linking to http://unbounce.com/noob-guide-to-online-marketing-infographic/]

Unbounce – The DIY Landing Page Platform

So, the image is there but the link they are pimping is a standard page:

http://unbounce.com/noob-guide-to-online-marketing-infographic/

They also cheekily add an extra homepage link in as well with some keywords and the brand so if folks don't remove that they still get that benefit.

Ultimately, it means that when links flood into the site they benefit the whole site rather than just promote one PDF.

Just my tuppence!

Marcus -

Thanks for the code Marcus.

Actually, the PDF is what people will be linking to. It's a guide for websites. I think the PDF will be much easier to promote than the article. I assume so anyway.

Is there a way to make sure my canonical code in htaccess is working after I insert the code?

Thanks again,

Bob

-

Hey Bob

There is a much easier way to do this: simply put the PDFs that you don't want indexed in a folder that you block in robots.txt. This way you can just drop PDFs into articles and link to them, knowing full well that the PDFs will not be indexed.

Assuming you had a PDF called article.pdf in a folder called pdfs/ then the following would prevent indexation.

User-agent: *
Disallow: /pdfs/

Or to just block the file itself:

User-agent: *
Disallow: /pdfs/article.pdf

Additionally, there is no reason not to add the canonical link as well. If you find people are linking directly to the PDF, having this would ensure that the equity associated with those links is correctly attributed to the parent page (always a good thing).

Header add Link '<http://www.url.co.uk/pdfs/article.html>; rel="canonical"'

Generally, there are better ways to block indexation than robots.txt. In the case of PDFs, though, we really don't want these files indexed, as they make such poor landing pages (no navigation), and we certainly want to remove any competition or duplication between the page and the PDF. So in this case, it makes for a quick, painless and suitable solution.
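To sanity-check robots.txt rules like these before uploading them, a short script can help. The sketch below uses Python's standard `urllib.robotparser`; the `is_blocked` helper and the sample paths are assumptions for illustration, and the standard-library parser only approximates how any particular search engine reads the file.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt, path, user_agent="*"):
    """Return True if the given robots.txt text disallows crawling `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, path)


# Example rules blocking the whole pdfs/ folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /pdfs/
"""

print(is_blocked(ROBOTS_TXT, "/pdfs/article.pdf"))       # True
print(is_blocked(ROBOTS_TXT, "/articles/article.html"))  # False
```

One thing worth keeping in mind: a crawler that obeys the Disallow rule never requests the PDF, so it will not see any HTTP headers sent with that file either.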

Hope that helps!

Marcus -

Thanks ThompsonPaul,

Say the pdf is located at

domain.com/pdfs/white-papers.pdf

and the article that I want to rank is at

domain.com/articles/article.html

do I simply add this to my htaccess file?:

Header add Link '<http://www.domain.com/articles/article.html>; rel="canonical"'
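With only a handful of PDFs, one option is to generate a rule per file so that the header is sent only for the PDF and not for every response. The sketch below is hypothetical: the function name is made up, it assumes Apache with mod_headers enabled and a host that allows `Header` inside .htaccess, and it assumes the output is placed in the .htaccess of the folder holding the PDFs.

```python
def canonical_htaccess_rules(pdf_to_canonical):
    """Build .htaccess text that sends a rel="canonical" Link header for each PDF."""
    blocks = []
    for pdf_name, canonical_url in pdf_to_canonical.items():
        # <Files> limits the header to responses for this one file name.
        blocks.append(
            f'<Files "{pdf_name}">\n'
            f"    Header add Link '<{canonical_url}>; rel=\"canonical\"'\n"
            "</Files>"
        )
    return "\n\n".join(blocks) + "\n"


# Paths taken from the question above; adjust to the real site.
print(canonical_htaccess_rules({
    "white-papers.pdf": "http://www.domain.com/articles/article.html",
}))
```

Keeping the mapping in one place makes it easy to add the next PDF without hand-editing quotes and angle brackets, which is where these lines tend to break.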

-

You can insert the canonical header link using your site's .htaccess file, Bob. I'm sure Hostgator provides access to the htaccess file through ftp (sometimes you have to turn on "show hidden files") or through the file manager built into your cPanel.

Check tip #2 in this recent SEOMoz blog article for specifics:

seomoz.org/blog/htaccess-file-snippets-for-seos

Just remember too - you will want to do the same kind of on-page optimization for the PDF as you do for regular pages.

- Give it a good, descriptive, keyword-appropriate, dash-separated file name. (essential for usability as well, since it will become the title of the icon when saved to someone's desktop)

- Fill out the metadata for the PDF, especially the Title and Description. In Acrobat it's under File -> Properties -> Description tab (to get the meta-description itself, you'll need to click on the Additional Metadata button)
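For the file name tip, a small helper can turn a working title into a dash-separated name. This is just a sketch: the function name and the sample title are made up for illustration, and you would still want to check by hand that the result is keyword-appropriate.

```python
import re
import unicodedata


def pdf_file_name(title):
    """Turn a document title into a lowercase, dash-separated PDF file name."""
    # Reduce accented characters to their closest ASCII equivalents.
    ascii_title = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Collapse every run of non-alphanumeric characters into a single dash.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")
    return f"{slug}.pdf"


print(pdf_file_name("How to Choose a Product"))  # how-to-choose-a-product.pdf
```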

I'd be tempted to build the links to the HTML page as much as possible, as those will directly help ranking, unlike the PDF's inbound links, which will have to pass their link juice through the canonical, assuming you're using it. Plus, the visitor will get a preview of the PDF's content and context from the rest of your site, which may increase trust and engender further engagement.

Your comment about links in the PDF got kind of muddled, but you'll definitely want to make certain there are good links and calls to action back to your website within the PDF - preferably on each page. Otherwise there's no clear "next step" for users reading the PDF back to a purchase on your site. Make sure to put Analytics tracking tags on these links so you can assess the value of traffic generated back from the PDF - otherwise the traffic will just appear as Direct in your Analytics.
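As one way to tag those links, the sketch below appends campaign parameters to a URL. It assumes you are using Google Analytics, which reads the `utm_source`, `utm_medium` and `utm_campaign` query parameters; the function name and the sample values are hypothetical, so pick names that fit your own reporting.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_campaign_tags(url, source, medium, campaign):
    """Append utm_* campaign parameters to a URL, keeping any existing query string."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [
        ("utm_source", source),
        ("utm_medium", medium),
        ("utm_campaign", campaign),
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


# Sample values only; the URL is the article path used elsewhere in this thread.
print(add_campaign_tags(
    "http://www.domain.com/articles/article.html",
    source="white-paper",
    medium="pdf",
    campaign="product-guide",
))
```

Using a different campaign value per PDF lets you compare how much traffic each document sends back to the site.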

Hope that all helps;

Paul

-

Can I just use htaccess?

See here: http://www.seomoz.org/blog/how-to-advanced-relcanonical-http-headers

We only have one pdf like this right now and we plan to have no more than five.

Say the pdf is located at

domain.com/pdfs/white-papers.pdf

and the article that I want to rank is at

domain.com/articles/article.pdf

do I simply add this to my htaccess file?:

Header add Link '<http://www.domain.com/articles/article.pdf>; rel="canonical"'

-

How do I know if I can do an HTTP header request? I'm using shared hosting through hostgator.

-

PDFs don't seem to rank as well as normal web pages. They do still rank, don't get me wrong; we have over 100 PDF pages that get traffic for us. The main version is really up to you: what do you want to show in the search results? I think it would be easier to rank a normal web page, though. If you use rel="canonical", it will pass most of the link juice; not all, but most.

-

Thank you DoRM,

I assume that the PDF is what I want to be the main version since that is what I'll be marketing, but I could be wrong? What if I get backlinks to both pages, will both sets of backlinks count?

-

Indicate the canonical version of a URL by responding with the Link rel="canonical" HTTP header. Adding rel="canonical" to the head section of a page is useful for HTML content, but it can't be used for PDFs and other file types indexed by Google Web Search. In these cases you can indicate a canonical URL by responding with the Link rel="canonical" HTTP header, like this (note that to use this option, you'll need to be able to configure your server):

Link: <http://www.example.com/downloads/white-paper.pdf>; rel="canonical"

Google currently supports these link header elements for Web Search only.

You can read more here: http://support.google.com/webmasters/bin/answer.py?hl=en&answer=139394

