Moz Q&A is closed.

After more than 13 years and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content (many posts will remain viewable), we have locked both new posts and new replies.

Fetch as Google Desktop Render Width?

-

What is Google's minimum desktop responsive webpage width?

Fetch as Google for desktop is showing a skinnier version of our responsive page.

-

Clever PhD hit the nail on the head; his answer is excellent.

-

Howdy!

TL;DR: I would estimate that Googlebot desktop renders at about 980 pixels wide, but there is an easy way to test. Adjust the width of your browser window and see if you can duplicate what you see in Google's Fetch and Render.

According to http://www.w3schools.com/browsers/browsers_display.asp, 97% of browsers have a display width of 1024 pixels or greater. Therefore, if you design for that minimum of 1024, your width would be appropriate for pretty much everyone. That said, you might want to go with 980 pixels to account for things like scroll bars and the fact that most people do not browse in full screen. This is a pretty standard starting point for width.

When you use Fetch and Render, Google uses one of its bots depending on the type of page: https://support.google.com/webmasters/answer/6066468?hl=en

When Google talks about responsive design (https://developers.google.com/webmasters/mobile-sites/mobile-seo/responsive-design), it notes, "When the meta viewport element is absent, mobile browsers default to rendering the page at a desktop screen width (usually about 980px, though this varies across devices)." In other words, some Google documentation gives a nod to 980 pixels being a "standard desktop width".

With that in mind, I would look at your site and see if this jibes. If you have set up the page to look "normal" at greater than 980 pixels, say 1200 pixels, set your browser width to 1200 pixels. Then play with the width of the browser and see if you can get it to match what you see in Fetch and Render. If your site looks the same as the Fetch and Render output when your browser is at 980 pixels, then you have a confirmation of the Googlebot desktop viewport size.

You could also set up a simple page and put several images on separate rows that are 950px, 980px, 1000px, 1200px, etc. wide. Run Fetch and Render and see what happens, but I like my first suggestion better.
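If you want to try the test page idea, here is a rough sketch in Python that generates one. It is only an illustration under a few assumptions: it uses fixed-width colored bars in place of images, and the function name `build_test_page` and the exact styling are made up for this example. Each bar is labeled with its width at its right-hand edge, so the widest bar whose label is still visible in the rendered screenshot gives a rough idea of the render width.

```python
from html import escape

# Widths to probe, taken from the suggestion above; adjust as needed.
WIDTHS_PX = [950, 980, 1000, 1200]


def build_test_page(widths=WIDTHS_PX):
    """Return an HTML page with one fixed-width, labeled bar per row.

    Hypothetical helper for illustration; bars stand in for images.
    """
    rows = []
    for width in widths:
        label = escape(f"{width}px")
        # The label is right-aligned so it marks the bar's right edge.
        rows.append(
            f'<div style="width:{width}px;height:40px;margin:4px 0;'
            f'background:#369;color:#fff;font:16px sans-serif;'
            f'text-align:right">{label}</div>'
        )
    return (
        "<!DOCTYPE html>\n"
        '<html><head><meta charset="utf-8">'
        "<title>Render width test</title></head>\n"
        '<body style="margin:0">\n' + "\n".join(rows) + "\n</body></html>\n"
    )


if __name__ == "__main__":
    # Print the page; redirect the output to a file and upload it to your site.
    print(build_test_page())
```

Save the output as an HTML file on your site, run Fetch and Render against it, and compare which labels are cut off in the screenshot.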

Have fun!

Related Questions

-

Why does my Google Web Cache Redirects to My Homepage?

Why does my Google Webcache appears in a short period of time and then automatically redirects to my homepage? Is there something wrong with my robots.txt? The only files that I have blocked is below: User-agent: * Disallow: /bin/ Disallow: /common/ Disallow: /css/ Disallow: /download/ Disallow: /images/ Disallow: /medias/ Disallow: /ClientInfo.aspx Disallow: /*affiliateId* Disallow: /*referral*

Technical SEO | | Francis.Magos0 -

Fake Links indexing in google

Hello everyone, I have an interesting situation occurring here, and hoping maybe someone here has seen something of this nature or be able to offer some sort of advice. So, we recently installed a wordpress to a subdomain for our business and have been blogging through it. We added the google webmaster tools meta tag and I've noticed an increase in 404 links. I brought this up to or server admin, and he verified that there were a lot of ip's pinging our server looking for these links that don't exist. We've combed through our server files and nothing seems to be compromised. Today, we noticed that when you do site:ourdomain.com into google the subdomain with wordpress shows hundreds of these fake links, that when you visit them, return a 404 page. Just curious if anyone has seen anything like this, what it may be, how we can stop it, could it negatively impact us in anyway? Should we even worry about it? Here's the link to the google results. https://www.google.com/search?q=site%3Amshowells.com&oq=site%3A&aqs=chrome.0.69i59j69i57j69i58.1905j0j1&sourceid=chrome&es_sm=91&ie=UTF-8 (odd links show up on pages 2-3+)

Technical SEO | | mshowells0 -

Image Indexing Issue by Google

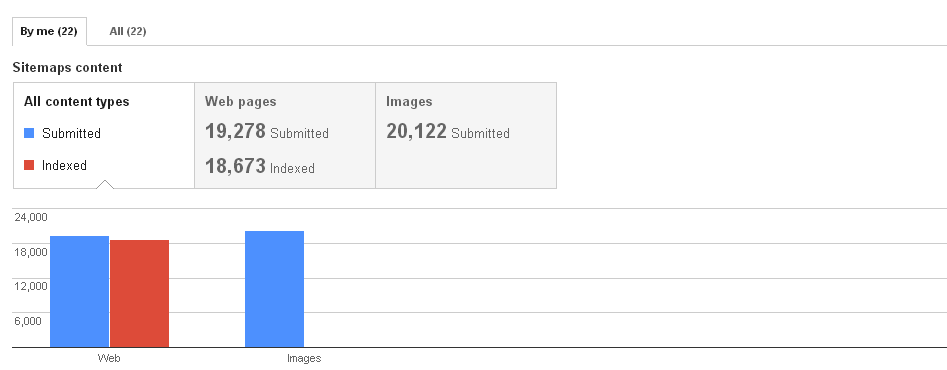

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

How to stop my webmail pages not to be indexed on Google ??

when i did a search in google for Site:mywebsite.com , for a list of pages indexed. Surprisingly the following come up " Webmail - Login " Although this is associated with the domain , this is a completely different server , this the rackspace email server browser interface I am sure that there is nothing on the website that links or points to this.

Technical SEO | | UIPL

So why is Google indexing it ? & how do I get it out of there. I tried in webmaster tool but I could not , as it seems like a sub-domain. Any ideas ? Thanks Naresh Sadasivan0 -

Google ranking my site abroad, how to stop?

Hi Mozzers, I have a UK based ecommerce site, that sells only to the UK. Over the last month Google has started ranking my site on foreign flavours of Google, so I keep getting traffic coming to my site from Europe, America and the far east that we could never sell to, and as a result bounce is going up and engagement is going down. They are definitely coming to the site from google searches that relate to my product type, but in regions I do not service. Is there a way to stop google doing this? I have the target set to UK in WMT, but is there anything else I can do? I worried about my UK ranking being damaged by an increasing overall bounce rate. Thanks

Technical SEO | | FDFPres0 -

Tags showing up in Google

Yesterday a user pointed out to me that Tags were being indexed in Google search results and that was not a good idea. I went into my Yoast settings and checked the "nofollow, index" in my Taxanomies, but when checking the source code for no follow, I found nothing. So instead, I went into the robot.txt and disallowed /tag/ Is that ok? or is that a bad idea? The site is The Tech Block for anyone interested in looking.

Technical SEO | | ttb0 -

Can Google read onClick links?

Can Google read and pass link juice in a link like this? <a <span="">href</a><a <span="">="#Link123" onClick="window.open('http://www.mycompany.com/example','Link123')">src="../../img/example.gif"/></a> Thanks!

Technical SEO | | jorgediaz0 -

Google Off/On Tags

I came across this article about telling google not to crawl a portion of a webpage, but I never hear anyone in the SEO community talk about them. http://perishablepress.com/press/2009/08/23/tell-google-to-not-index-certain-parts-of-your-page/ Does anyone use these and find them to be effective? If not, how do you suggest noindexing/canonicalizing a portion of a page to avoid duplicate content that shows up on multiple pages?

Technical SEO | | Hakkasan1