Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

Fetch as Google temporarily lifting a penalty?

-

Hi, I was wondering if anyone has seen this behaviour before? I haven't!

We have around 20 sites, and since the Medic update each one has lost all of its rankings (not in the index at all), except for searches with a location added to the end of the keyword.

I set to work trying to identify a common issue on each site, and began by fixing the speed issues flagged in PageSpeed Insights. On one site I realised that after I had improved the speed score and then clicked "Fetch as Google", the rankings for that site all returned within seconds.

I did the same for a different site and exactly the same result. Cue me jumping around the office in delight! The pressure is off, people's jobs are safe, have a cup of tea and relax.

Unfortunately this relief lasted only 6-12 hours, and then the rankings went again. To me it seems that the sites are all suffering from some kind of on-page penalty, which is lifted until the page can be assessed again; when it is, the penalty is reapplied.

Not one to give up, I set about methodically making changes until I found the issue. So far I have completely rewritten a site, reduced overuse of keywords, and added over 2000 words to the homepage. I clicked Fetch as Google and the site came back - for 6 hours... So then I gave the site a completely fresh redesign and again clicked Fetch as Google, with the same result. Since doing all that, I have swapped over to https, 301 redirected etc., and now the site is completely gone and won't come back after fetching as Google. Uh!

So before I dig myself even deeper, has anyone any ideas?

Thanks.

-

Unfortunately it's going to be difficult to dig deeper into this without knowing the site - are you able to share the details?

I'm with Martijn that there should be no connection between these features. The only thing I have come up with that could plausibly cause anything like what you are seeing is something related to JavaScript execution (and this would not be a feature working as intended). We know that there is a delay between initial indexing and JavaScript indexing. It seems plausible to me that, if there were a serious enough issue with the JS execution / indexing (either that step failing, or the rendered result making the site look spammy enough to get penalised), we could conceivably see the behaviour you describe, where the site ranks until Google executes the JS.

I guess my first step in investigating this would be to look at the JS requirements on your site and compare the page with and without JS rendering (and check whether there is any issue with the Chrome version that we know executes the JS render on Google's side).
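As a rough illustration of that comparison, here is a minimal Python sketch that diffs the visible words in two copies of a page: the raw HTML as served, and the HTML after JavaScript has run. It assumes you have already saved both copies yourself (for example, "view source" versus the DOM copied out of browser dev tools); the function names and the sample markup are hypothetical, and this is not how Google itself evaluates pages.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible words, ignoring the contents of <script> and <style>."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.words.extend(data.lower().split())


def visible_words(html):
    """Return the set of lower-cased visible words in an HTML string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return set(parser.words)


def render_diff(raw_html, rendered_html):
    """List words that appear in only one of the two versions of the page."""
    raw = visible_words(raw_html)
    rendered = visible_words(rendered_html)
    return {
        "only_in_raw": sorted(raw - rendered),
        "only_in_rendered": sorted(rendered - raw),
    }


# Hypothetical sample pages: the script injects extra text when it runs.
raw_page = "<body><h1>Plumber London</h1><script>inject()</script></body>"
rendered_page = "<body><h1>Plumber London</h1><p>cheap plumber</p></body>"
print(render_diff(raw_page, rendered_page))
```

A large `only_in_rendered` list (or an unexpectedly empty page after rendering) would be a hint that the JS step changes what a crawler sees, which is the situation described above.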

Interested to hear if you discover anything more.

-

Hey, it’s good to have a fresh pair of eyes on it. There may be something simple that I’ve missed.

I've checked rankings on my Mac, on 2 web servers in different locations via Remote Desktop, and with 2 independent ranking checkers, and they all show the same thing: recovery for 12ish hours, along with traffic and conversions. Then nothing.

When the rankings disappear I can hit Fetch as Google again straight away and they come straight back. If I leave it for days, then they’re gone for days, until I hit fetch, then straight back.
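One way to make this pattern easier to share and verify is to log every fetch and every rank check with a timestamp, then measure how long each recovery lasted. The sketch below is only an illustration under assumptions: the function name, the data layout, and the sample timestamps are all hypothetical, and the rank-check results would have to come from your own tools.

```python
from datetime import datetime, timedelta


def recovery_windows(fetch_times, checks):
    """For each fetch, measure how long the site stayed ranked afterwards.

    fetch_times: datetimes when Fetch as Google was clicked.
    checks: (datetime, ranked) tuples recorded by a rank checker.
    Returns (fetch_time, duration) pairs, where duration runs from the fetch
    to the last consecutive ranked check before the next fetch. Duration is
    None when the first check after the fetch was already unranked.
    """
    fetch_times = sorted(fetch_times)
    checks = sorted(checks)
    results = []
    for i, fetch in enumerate(fetch_times):
        next_fetch = fetch_times[i + 1] if i + 1 < len(fetch_times) else None
        last_ranked = None
        for when, ranked in checks:
            if when < fetch:
                continue  # check happened before this fetch
            if next_fetch is not None and when >= next_fetch:
                break  # check belongs to the next fetch
            if not ranked:
                break  # rankings dropped; the recovery window is over
            last_ranked = when
        duration = last_ranked - fetch if last_ranked is not None else None
        results.append((fetch, duration))
    return results


# Hypothetical log: two fetches a day apart, checks at irregular intervals.
start = datetime(2018, 9, 1, 9, 0)
fetches = [start, start + timedelta(hours=24)]
log = [
    (start + timedelta(hours=1), True),
    (start + timedelta(hours=6), True),
    (start + timedelta(hours=12), False),
    (start + timedelta(hours=25), True),
    (start + timedelta(hours=30), True),
    (start + timedelta(hours=40), False),
]
for fetch, duration in recovery_windows(fetches, log):
    print(fetch.isoformat(), duration)
```

Running the same log against a control site where you never click fetch would also help separate a genuine effect from coincidence or personalisation.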

cheers

-

Ok, that still doesn't mean that they're not personalized. But I'll skip on that part for now.

In the end, the changes that you're seeing aren't triggered by what you're doing with Fetch as Google. I'll leave it up to some others to see if they'll shine a light on the situation.

-

Hi,

Thanks,

They’re not personalised, as my ranking checkers don’t show personalised results.

-

Hi,

I'm afraid I have to wake you from this dream: there is no connection whatsoever between rankings and the Fetch as Google feature within Google Search Console. What is likely happening is that you're already getting personalized results, and within a certain timeframe the ads won't be shown because the results will be different, as Google thinks that you've already seen the first results on the page the first time that you Googled this.

Fetch as Google doesn't provide any signal to the regular ranking engines to say: "Hey, we've fetched something new and now it's going to make an impact on this". Definitely not at the speed that you're describing (within seconds).

Martijn.


Related Questions

-

Google Not Indexing Pages (Wordpress)

Hello, recently I started noticing that google is not indexing our new pages or our new blog posts. We are simply getting a "Discovered - Currently Not Indexed" message on all new pages. When I click "Request Indexing" is takes a few days, but eventually it does get indexed and is on Google. This is very strange, as our website has been around since the late 90's and the quality of the new content is neither duplicate nor "low quality". We started noticing this happening around February. We also do not have many pages - maybe 500 maximum? I have looked at all the obvious answers (allowing for indexing, etc.), but just can't seem to pinpoint a reason why. Has anyone had this happen recently? It is getting very annoying having to manually go in and request indexing for every page and makes me think there may be some underlying issues with the website that should be fixed.

Technical SEO | | Hasanovic1 -

Favicon not showing in google serps

Hi, I have a website where the favicon is not showing in the google mobile serps. It's appearing the default icon instead (world icon). This is the tag I have place in the head section of the website: <link rel="shortcut icon" href="/favicon.ico" /> The size of the favicon is 48x48 and it's appearing correctly in the browser tag. I've checked that the google robot can crawl it and in the server logs I can see requests from the "Google Favicon" user-agent. Has anyone had this same problem? Any advice?

Technical SEO | | dMaLasp0 -

Why Google de-rank a website.

Hi, I was inspecting a website which is covering the topic of best wheelbarrow of 2021, it is a new website and and starts ranking on google. But, after few days it got de-rank automatically and Moz is also not showing any result to that. I was wandering why this just happened and what should I do if I made my website and will not face this kind of situation?

Technical SEO | | Moeen22330 -

How to: Sub headings appears in google results

I notice that many sites have sub headings appearing below their google results. http://i.imgur.com/A5JxMKD.png

Technical SEO | | kevinbp

See image for example. Q: what are these called? and how do we get them? A5JxMKD.png1 -

Is Google suppressing a page from results - if so why?

UPDATE: It seems the issue was that pages were accessible via multiple URLs (i.e. with and without trailing slash, with and without .aspx extension). Once this issue was resolved, pages started ranking again. Our website used to rank well for a keyword (top 5), though this was over a year ago now. Since then the page no longer ranks at all, but sub pages of that page rank around 40th-60th. I searched for our site and the term on Google (i.e. 'Keyword site:MySite.com') and increased the number of results to 100, again the page isn't in the results. However when I just search for our site (site:MySite.com) then the page is there, appearing higher up the results than the sub pages. I thought this may be down to keyword stuffing; there were around 20-30 instances of the keyword on the page, however roughly the same quantity of keywords were on each sub pages as well. I've now removed some of the excess keywords from all sections as it was getting in the way of usability as well, but I just wanted some thoughts on whether this is a likely cause or if there is something else I should be worried about.

Technical SEO | | Datel1 -

Why is my blog disappearing from Google index?

My Google blogger blog is about 10 months old. In that time i have worked really hard with adding unique content, building relationships with other bloggers in the same niche, and done some inbound marketing. 2 weeks ago I updated the template to something cleaner, with a little more "wordpress" feel to it. This means i've messed about with the code a lot in these weeks, adding social buttons etc. The problem is that from some point late last week thurs/fri my pages started disappearing from Googles index. I have checked webmaster tools and have no manual actions. My link profile is pretty clean as its a new site, and i have manually checked every piece of content published for plagiarism etc. So what is going on? Did i break my blog? Or is something else amiss? Impressions are down 96% comparing Nov 1-5th to previous 5 days. site is here: http://bit.ly/174beVm Thanks for any help in advance.

Technical SEO | | Silkstream0 -

Image Indexing Issue by Google

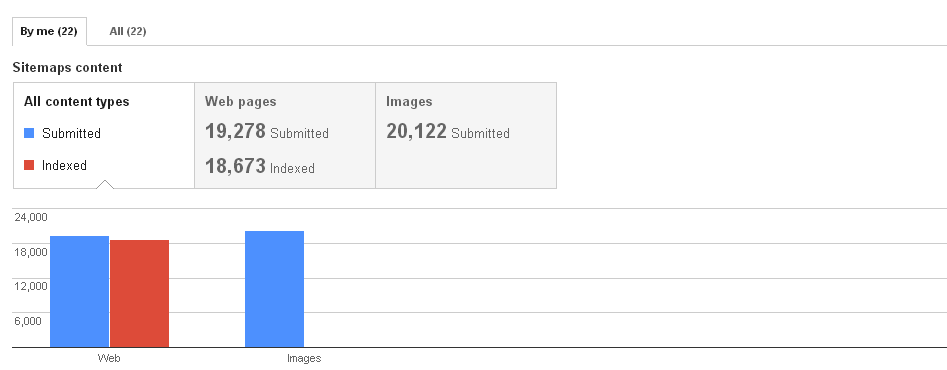

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

Ranking on google.com.au but not google.com

Hi there, we (www.refundfx.com.au) rank on google.com.au for some keywords that we target, but we do not rank at all on google.com, is that because we only use a .com.au domain and not a .com domain? We are an Australian company but our customers come from all over the world so we don't want to miss out on the google.com searches. Any help in this regard is appreciated. Thanks.

Technical SEO | | RefundFX0