Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Staging website got indexed by google

-

Our staging website got indexed by google and now MOZ is showing all inbound links from staging site, how should i remove those links and make it no index.

Note- we already added Meta NOINDEX in head tag

-

Hi Dera Moz My Domain Is 18 Years Old But Da is don't increased i don't know why can you please help me and check my url cigars please check sir

#mozda

-

Its good that you already put the Meta NOINDEX.

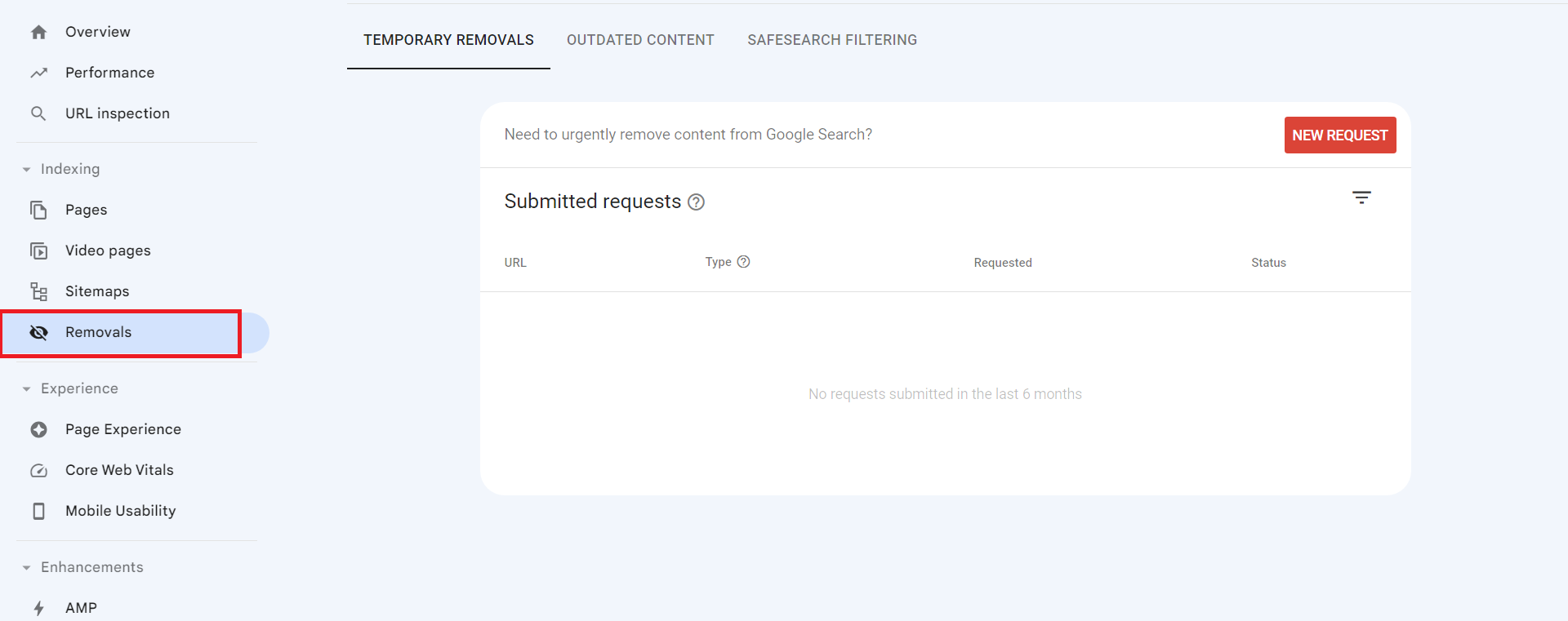

Now, you can ask to remove the url of website from google index. Visit the google search console and request the url removal.

You can use the URL Removal Tool in Google Search Console to request the removal of specific URLs from Google's index.

To use the URL Removal Tool, you can:

- Open the Removals tool.

- Select the Temporary Removals tab.

- Click New Request.

- Select Next to complete the process.

Warm Regards

Rahul Gupta

Suvidit Academy -

Sydney's Best Chauffeur Car Service | A1 Corporate Cars Au

Sydney's Best Chauffeur Car Service is a premier provider of corporate chauffeured cars in Sydney, Australia. We offer top-of [url=https://a1corporatecars.com.au/]corporate cars Australia[/url] transportation solutions for business professionals, executives, and VIP clients who demand the highest service and comfort. With a fleet of luxury vehicles and experienced professional chauffeurs, we ensure a seamless and luxurious travel experience for our esteemed customers.

-

If your staging website has been indexed by Google, it means that Google's web crawlers have discovered and added your staging site's pages to their search index. This is typically not desirable because staging websites are meant for testing and development purposes and often contain incomplete or confidential content.

To address this issue, you can take several steps. Firstly, ensure that your staging website has a "robots.txt" file configured properly. This file tells search engines which parts of your website to crawl and index. In the case of a staging site, you can disallow all web crawlers from indexing it by using a "robots.txt" file.

Another effective measure is to include a "noindex" meta tag in the HTML of your staging website's pages. This tag instructs search engines not to index the page, adding an extra layer of protection.

Consider password-protecting your staging website using HTTP authentication. This adds an additional layer of security and ensures that only authorized users can access the site.

To further mitigate indexing issues, you can set up your staging website on a subdomain or a subdirectory instead of a separate domain. Google is less likely to index staging content if it's located in a subdomain or subdirectory.

If your staging site is already indexed, you can request the removal of specific URLs from Google's index using the Google Search Console's URL Removal Tool. This is a more proactive approach to remove already indexed content.

Lastly, regularly monitor your staging website to ensure it remains hidden from search engines and that any changes to the robots.txt file or meta tags are being followed. It's a good practice to implement these measures before you create or launch a staging website to prevent it from being indexed in the first place.

Remember that it may take some time for Google to update its index and remove your staging site's pages. Be patient and continue to monitor the situation closely to ensure the desired results are achieved.

-

If a staging website (a non-production or testing version) gets indexed by Google, it can lead to privacy, user experience, and SEO issues. To address this, use methods like robots.txt, "noindex" meta tags, or password protection to prevent indexing. If already indexed, request removal through Google Search Console to ensure only the production site is visible in search results.

-

If your staging website has been indexed by Google, it means that Google's search engine has discovered and included your staging site in its search results. This is not an ideal situation since staging websites are usually intended for testing and development purposes, and you may not want them publicly accessible.

To address this issue, you can take a few steps:

Use a robots.txt file: Create a robots.txt file on your staging website and instruct search engines not to index it. This file specifies which areas of your site search engines should or should not crawl.

Add a noindex meta tag: Insert a "noindex" meta tag in the head section of your staging website's HTML. This tag tells search engines not to index that specific page.

Password protect your staging website: Implement password protection on your staging environment to ensure that only authorized users can access it. This can be done through various authentication methods, depending on your setup.

Remember that these steps can help prevent further indexing, but they may not immediately remove your staging site from the search results. It might take some time for search engines to re-crawl your site and recognize the changes you made.

-

If your staging website gets indexed by Google, you should take these steps:

( Atlantic Immigration Pilot Program application form)

Use a robots.txt file to disallow indexing.

Request removal of indexed pages via Google Search Console.

Canada PR

Add a "noindex, nofollow" meta tag to staging pages.

Consider password protecting the staging site.

Ensure canonical URLs point to the production site.

These actions will help prevent your incomplete or sensitive staging content from appearing in Google search results.

Best digital marketing agency -

If your staging website has been indexed by Google, it means that Google's search engine has crawled and added your staging site's pages to its search index. This is typically not desired because staging websites are not meant for public access and may contain incomplete or sensitive content.

To address this issue, you should take the following steps:

Disallow indexing: Use a robots.txt file to instruct search engines not to crawl and index your staging website. You can add the following lines to your robots.txt file to disallow all search engines:

makefile

Copy code

User-agent: *

Disallow: /

Place this robots.txt file in the root directory of your staging website.Remove indexed pages: You can request Google to remove indexed pages from its search results by using the Google Search Console's "Remove URLs" tool. Log in to your Google Search Console account, select your property, go to the "Index" section, and choose "Removals." From there, you can temporarily hide specific URLs from Google search results.

Use noindex meta tags: On your staging website's pages, you can add a meta tag to indicate that the page should not be indexed. Add the following meta tag within the HTML <head> section of each page you want to exclude:

html

Copy code

<meta name="robots" content="noindex, nofollow">

This tag tells search engines not to index the page or follow any links on it.Password protection: Consider adding password protection to your staging website, so only authorized users can access it. This adds an additional layer of security and privacy.

Update canonical URLs: Ensure that your staging website's canonical URLs (if used) point to the production website, not the staging one. This helps search engines understand the preferred version of your content.

After taking these steps, monitor your staging website to ensure it's no longer being indexed by Google. Keep in mind that it may take some time for changes to take effect and for Google to de-index your staging content.

-

@Asmi-Ta said in Staging website got indexed by google:

Our staging website got indexed by google and now MOZ is showing all inbound links from staging site, how should i remove those links and make it no index.

Note- we already added Meta NOINDEX in head tagTo remove indexed staging site links and prevent further indexing, take these steps: Add a "Disallow" rule for the staging site in your

robots.txtfile, use 301 redirects for indexed staging URLs to point to production, update all internal links to production URLs, request URL removals through Google Search Console's "Fetch as Google" and URL Removal Tool, submit an updated production sitemap, and monitor Google Search Console for updates. Be patient, as it may take time for search engines to de-index staging URLs and re-crawl your site. Ensure the staging site has a "noindex" tag in its<head>section.

Got a burning SEO question?

Subscribe to Moz Pro to gain full access to Q&A, answer questions, and ask your own.

Browse Questions

Explore more categories

-

Moz Tools

Chat with the community about the Moz tools.

-

SEO Tactics

Discuss the SEO process with fellow marketers

-

Community

Discuss industry events, jobs, and news!

-

Digital Marketing

Chat about tactics outside of SEO

-

Research & Trends

Dive into research and trends in the search industry.

-

Support

Connect on product support and feature requests.

Related Questions

-

The particular page cannot be indexed by Google

Hello, Smart People!

On-Page Optimization | | Viktoriia1805

We need help solving the problem with Google indexing.

All pages of our website are crawled and indexed. All pages, including those mentioned, meet Google requirements and can be indexed. However, only this page is still not indexed.

Robots.txt is not blocking it.

We do not have a tag "nofollow"

We have it in the sitemap file.

We have internal links for this page from indexed pages.

We requested indexing many times, and it is still grey.

The page was established one year ago.

We are open to any suggestions or guidance you may have. What else can we do to expedite the indexing process?1 -

Page Indexing without content

Hello. I have a problem of page indexing without content. I have website in 3 different languages and 2 of the pages are indexing just fine, but one language page (the most important one) is indexing without content. When searching using site: page comes up, but when searching unique keywords for which I should rank 100% nothing comes up. This page was indexing just fine and the problem arose couple of days ago after google update finished. Looking further, the problem is language related and every page in the given language that is newly indexed has this problem, while pages that were last crawled around one week ago are just fine. Has anyone ran into this type of problem?

Technical SEO | | AtuliSulava1 -

Who is correct - please help!

I have a website with a lot of product pages - often thousands of pages. As each of these pages is for a specific lease car they are often only fractionally different from other pages. The urls are too long, the H1 is often too long and the Title is often too long for "SEO best practice". And they do create duplication issues according to MOZ. Some people tell me to change them to noindex/nofollow whilst others tell me to leave them as they are as best not to hide from google crawler. Any advice will be gratefully received. Thanks for listening.

Technical SEO | | jlhitch0 -

Unsolved Crawling only the Home of my website

Hello,

Product Support | | Azurius

I don't understand why MOZ crawl only the homepage of our webiste https://www.modelos-de-curriculum.com We add the website correctly, and we asked for crawling all the pages. But the tool find only the homepage. Why? We are testing the tool before to suscribe. But we need to be sure that the tool is working for our website. If you can please help us.0 -

Should I "no-index" two exact pages on Google results?

Hello everyone, I recently started a new wordpress website and created a static homepage. I noticed that on Google search results, there are two different URLs landing on same content page. I've attached an image to explain what I saw. Should I "no-index" the page url? Google url.JPG In this picture, the first result is the homepage and I try to rank for that page. The last result is landing on same content with different URL. So, should I no-index last result as shown in image?

Technical SEO | | amanda59640 -

Does Google Index URLs that are always 302 redirected

Hello community Due to the architecture of our site, we have a bunch of URLs that are 302 redirected to the same URL plus a query string appended to it. For example: www.example.com/hello.html is 302 redirected to www.example.com/hello.html?___store=abc The www.example.com/hello.html?___store=abc page also has a link canonical tag to www.example.com/hello.html In the above example, can www.example.com/hello.html every be Indexed, by google as I assume the googlebot will always be redirected to www.example.com/hello.html?___store=abc and will never see www.example.com/hello.html ? Thanks in advance for the help!

Intermediate & Advanced SEO | | EcommRulz0 -

Will Google View Using Google Translate As Duplicate?

If I have a page in English, which exist on 100 other websites, we have a case where my website has duplicate content. What if I use Google Translate to translate the page from English to Japanese, as the only website doing this translation will my page get credit for producing original content? Or, will Google view my page as duplicate content, because Google can tell it is translated from an original English page, which runs on 100+ different websites, since Google Translate is Google's own software?

Intermediate & Advanced SEO | | khi50 -

XML Sitemap index within a XML sitemaps index

We have a similar problem to http://www.seomoz.org/q/can-a-xml-sitemap-index-point-to-other-sitemaps-indexes Can a XML sitemap index point to other sitemaps indexes? According to the "Unique Doll Clothing" example on this link, it seems possible http://www.seomoz.org/blog/multiple-xml-sitemaps-increased-indexation-and-traffic Can someone share an XML Sitemap index within a XML sitemaps index example? We are looking for the format to implement the same on our website.

Intermediate & Advanced SEO | | Lakshdeep0