Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Page Indexing without content

-

Hello.

I have a problem with pages being indexed without content. My website is in 3 different languages. 2 of the language versions are indexed just fine, but one language version (the most important one) is indexed without content. When I search using site:, the page comes up, but when I search for unique keywords for which I should definitely rank, nothing comes up.

This page was indexed just fine, and the problem arose a couple of days ago, after the Google update finished. Looking further, the problem is language related: every newly indexed page in that language has this problem, while pages that were last crawled around one week ago are just fine.

Has anyone run into this type of problem?

-

I've encountered a similar indexing issue on my website, https://sunasusa.com/. To resolve it, ensure that the language markup and content accessibility on the affected pages are correct. Review any recent changes and the quality of your content. Utilize Google Search Console for insights, or consider reaching out to Google support for assistance.

-

To remove hacked URLs in bulk from Google's index, clean up your website, secure it, and then use Google Search Console to request removal of the unwanted URLs. Additionally, submit a new sitemap containing only valid URLs to expedite re-indexing.
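
As a rough illustration of the "new sitemap containing only valid URLs" step, here is a minimal Python sketch. It assumes you already have a cleaned list of good URLs; the `example.com` addresses and the function name `build_sitemap` are placeholders, not anything taken from a real site or tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) that lists only the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serializing.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical list of URLs that survived the clean-up.
valid_urls = ["https://example.com/", "https://example.com/blog/"]
sitemap_xml = build_sitemap(valid_urls)
print(sitemap_xml)
```

The output can be saved as `sitemap.xml` and submitted in Google Search Console once the hacked pages themselves have been removed from the server.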

-

It seems that after a recent Google update, one language version of your website is experiencing indexing issues, while others remain unaffected. This could be due to factors like changes in algorithms or technical issues. To address this:

- Check hreflang tags and content quality.

- Review technical aspects like crawlability and indexing directives.

- Monitor Google Search Console for errors.

- Consider seeking expert assistance if needed.
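
For the hreflang check in the list above, a small script can list which language alternates a page actually declares. This is only a sketch using Python's standard library: the sample HTML, the `example.com` URLs, and the names `HreflangParser` and `extract_hreflang` are assumptions made for illustration. In practice you would feed it the HTML of the affected language page.

```python
from html.parser import HTMLParser


class HreflangParser(HTMLParser):
    """Collects <link rel="alternate" hreflang="..."> entries from a page."""

    def __init__(self):
        super().__init__()
        self.alternates = {}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "alternate" in rel_values and attr.get("hreflang"):
            self.alternates[attr["hreflang"].lower()] = attr.get("href")


def extract_hreflang(html):
    """Return a dict mapping hreflang code to the declared URL."""
    parser = HreflangParser()
    parser.feed(html)
    return parser.alternates


# Hypothetical page head with two language alternates.
sample_html = """
<html><head>
<link rel="alternate" hreflang="en" href="https://example.com/en/">
<link rel="alternate" hreflang="de" href="https://example.com/de/">
<link rel="canonical" href="https://example.com/en/">
</head><body></body></html>
"""
alternates = extract_hreflang(sample_html)
print(alternates)
```

If the affected language is missing from the result, or points at the wrong URL, that is worth fixing before looking further.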

-

@AtuliSulava Re: Website blog is hacked. What's the best practice to remove bad URLs?

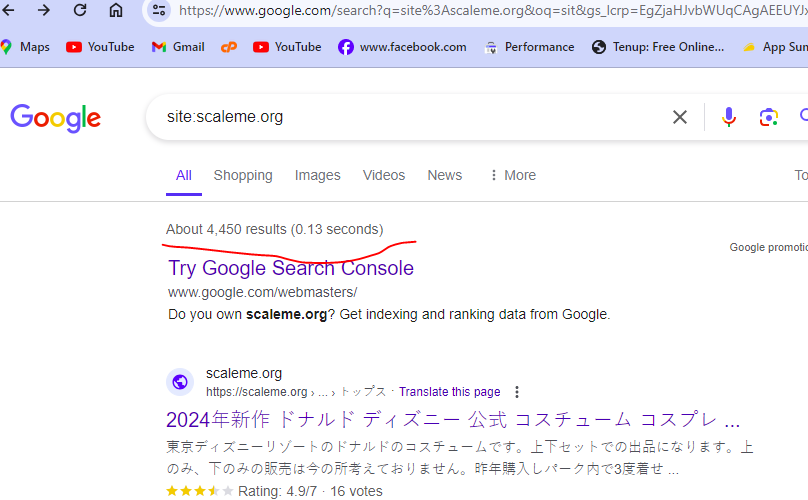

Something similar also happened to me: many URLs are indexed, but they have no actual content. My website (scaleme) was hacked, and thousands of URLs with Japanese content were added to it. These URLs are now indexed by Google. How can I remove them in bulk? (Screenshot attached.)

I am the owner of this website. More than 4,400 Japanese-language URLs were added to my site. I am aware of Google's URL removal tool, but submitting URLs for removal one by one is not feasible because so many of them have been indexed.

Is there a way to find these URLs, download a list, and remove them in bulk? Does Moz have a tool to solve this problem?
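
One possible way to approach the "find these URLs and download a list" part: if you can get a plain list of your indexed or crawled URLs (from a crawler, server logs, or a Search Console export; where the list comes from is an assumption here), a short Python script can pick out the ones containing Japanese characters. This is only a rough sketch. The `example.com` URLs and the function name `find_japanese_urls` are made up for illustration, and it will miss spam pages whose URLs contain no Japanese text.

```python
import re
from urllib.parse import unquote

# Hiragana, Katakana, and CJK Unified Ideographs (common in Japanese text).
JAPANESE_CHARS = re.compile(r"[\u3040-\u30ff\u4e00-\u9fff]")


def find_japanese_urls(urls):
    """Return the URLs whose percent-decoded form contains Japanese characters."""
    return [url for url in urls if JAPANESE_CHARS.search(unquote(url))]


# Hypothetical export: one normal URL and two spam-style URLs.
exported_urls = [
    "https://example.com/blog/seo-tips",
    "https://example.com/%E6%97%A5%E6%9C%AC%E8%AA%9E-page",
    "https://example.com/shop/テスト",
]
suspicious = find_japanese_urls(exported_urls)
for url in suspicious:
    print(url)
```

The resulting list can be saved to a file and reviewed by hand before you request any removals.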

-

I've faced a similar indexing issue on my own website, https://mobilespackages.in/. To resolve it, ensure correct language markup and content accessibility on the affected pages. Review recent changes and content quality. Use Google Search Console for insights, or reach out to Google support.

-

@AtuliSulava It sounds like you're experiencing a frustrating issue with your website's indexing. I have faced this issue myself: I once blocked my own website from being indexed by Google by mistake. Here are some steps you can take to troubleshoot and potentially resolve the problem:

Check Robots.txt: Ensure that your site's robots.txt file is not blocking search engine bots from accessing the content on the affected pages.

Review Meta Tags: Check the affected pages for a <meta name="robots" content="noindex"> tag. If it is present, remove it to allow indexing.

Content Accessibility: Make sure that the content on the affected pages is accessible to search engine bots. Check for any JavaScript, CSS, or other elements that might be blocking access to the content.

Canonical Tags: Verify that the canonical tags on the affected pages are correctly pointing to the preferred version of the page.

Structured Data Markup: Ensure that your pages have correct structured data markup to help search engines understand the content better.

URL Inspection (formerly "Fetch as Google"): Use Google Search Console's URL Inspection tool, which replaced the retired "Fetch as Google" feature, to see how Googlebot sees your page and whether there are any issues with rendering or accessing the content.

Monitor Google Search Console: Keep an eye on Google Search Console for any messages or issues related to indexing and crawlability of your site.

Wait for Re-crawl: Sometimes, Google's indexing issues resolve themselves over time as the search engine re-crawls and re-indexes your site. If the problem persists, consider requesting a re-crawl through Google Search Console.
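
The robots.txt and meta robots checks from the steps above can be scripted. Below is a minimal sketch using only Python's standard library. The sample robots.txt rules, the `/de/` path, the `example.com` URLs, and the helper names `is_crawl_allowed` and `has_noindex` are all assumptions for illustration. Python's `urllib.robotparser` also does not match rules exactly the way Googlebot does, so treat the result as a hint rather than a verdict.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt, url, user_agent="Googlebot"):
    """Check whether the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> / <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attr.get("content") or ""
            self.directives += [
                d.strip().lower() for d in content.split(",") if d.strip()
            ]


def has_noindex(html):
    """Return True if the page HTML carries a noindex robots directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical robots.txt that blocks one language folder by mistake.
sample_robots = "User-agent: *\nDisallow: /de/\n"
print(is_crawl_allowed(sample_robots, "https://example.com/de/page"))
print(is_crawl_allowed(sample_robots, "https://example.com/en/page"))
print(has_noindex('<meta name="robots" content="noindex, follow">'))
```

Run it against the real robots.txt and the HTML of a page from the affected language, then compare with a page from a language that is indexed correctly.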

If the issue continues, it might be beneficial to seek help from a professional SEO consultant who can perform a detailed analysis of your website and provide specific recommendations tailored to your situation.

-

@AtuliSulava Perhaps indexing of these pages is prohibited somewhere on the site. Look into how to check which site files contain an indexing ban...

